The integration of Generative AI (GenAI) into enterprise workflows represents a monumental leap in productivity. Tools like Google’s Gemini are at the forefront of this transformation, offering advanced capabilities for content creation, data analysis, and complex problem-solving. However, this power introduces new and significant security challenges. The potential for a Gemini data breach is a top concern for security analysts and CISOs, who are now tasked with protecting corporate assets in an expanded digital ecosystem. Understanding the mechanics of how such a breach could occur is the first step toward building a resilient defense.

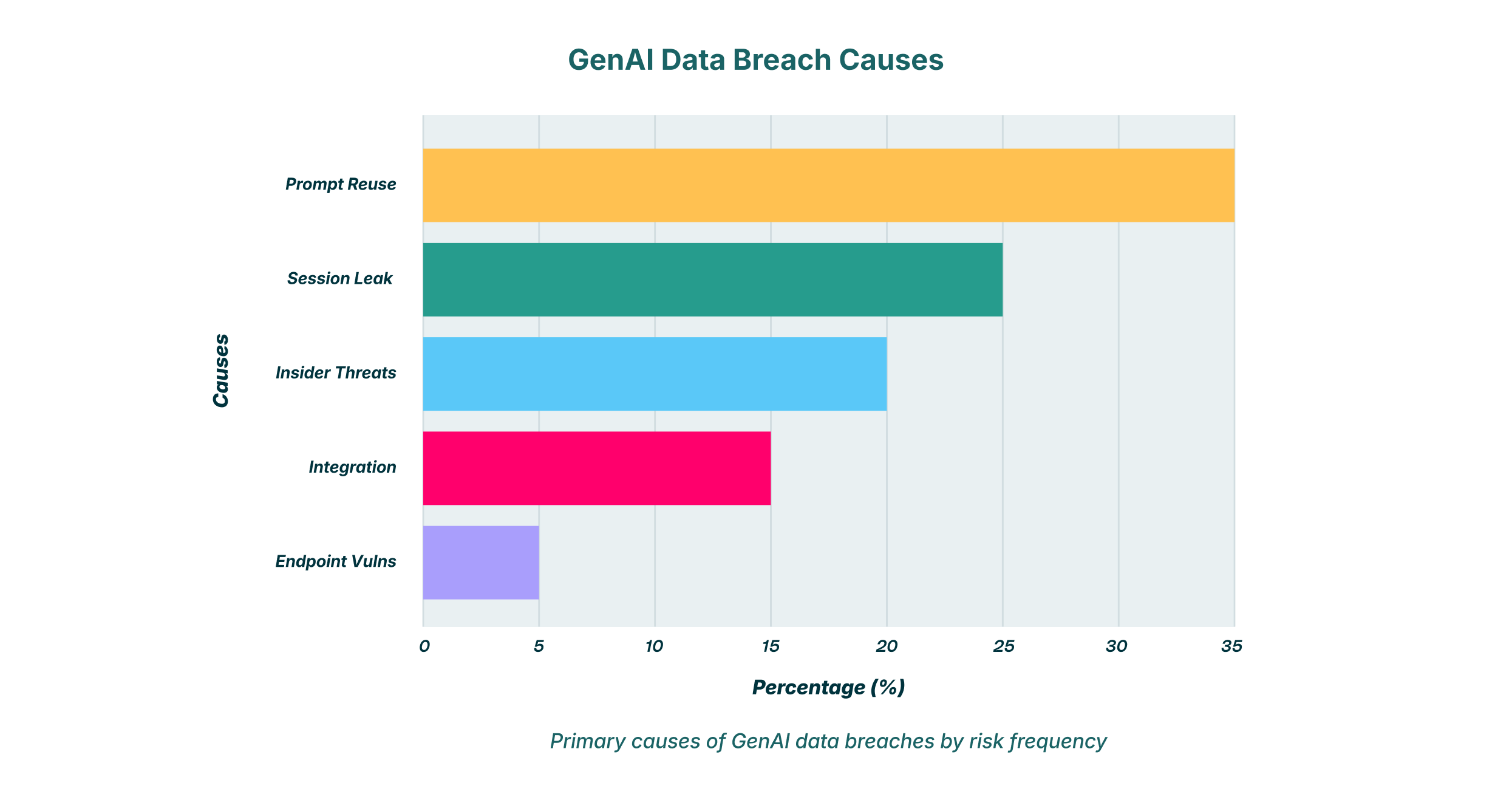

This article investigates the incidents and vulnerabilities tied to Gemini’s use in the enterprise, focusing on causes such as prompt reuse and session leaks. We will analyze the potential impact of an AI breach and outline the critical lessons for organizations aiming to harness GenAI’s benefits without succumbing to its risks. The central question we explore is not only whether a Gemini data leak can happen, but which proactive measures, including robust data loss prevention (DLP) and access controls, can prevent it.

The Anatomy of a Gemini Security Breach

A Gemini security breach doesn’t have to be a cataclysmic event orchestrated by a sophisticated external attacker. More often, it is a quiet, slow leak of sensitive information that originates from legitimate, albeit risky, user behavior. The primary threat vector is the interaction between an employee and the GenAI platform itself. Every prompt entered and every file uploaded is a potential point of data exposure.

Imagine a marketing manager using Gemini to summarize notes from a confidential M&A strategy meeting. They paste the entire meeting transcript into the prompt, asking the AI to distill key action items. In that moment, sensitive details about financials, personnel, and corporate strategy are transferred to a third-party cloud environment. Without proper controls, this data could be used to train the model, be retained in conversation logs, or potentially be exposed through a platform vulnerability.

Several key vulnerabilities can lead to a significant data exposure event when employees use GenAI tools like Gemini.

Prompt Reuse and Model Training

One of the most discussed risks is prompt reuse, where data submitted by users is incorporated into the LLM’s training dataset. While major providers like Google have policies against using API-submitted data to train their public models, the policies for consumer-facing versions of these tools can differ. An employee using a personal Gemini account on a corporate device could inadvertently contribute sensitive information to the model’s knowledge base. This information could then, in theory, surface in another user’s query, leading to an unpredictable and irreversible Gemini data leak.

Session Leak and Hijacking

A session leak is a more technical but equally dangerous vulnerability. If an employee’s Gemini session is compromised through a malicious browser extension, an unsecured Wi-Fi network, or a phishing attack, an attacker could gain access to the entire conversation history. That history could contain a treasure trove of sensitive data shared over weeks or months. Because modern malware can hijack sessions with relative ease, this is a critical threat vector for any web-based application, including GenAI platforms.

Insider Threats and Unsanctioned Use

The risk is not always external. A disgruntled employee could intentionally exfiltrate data by feeding it into Gemini and then accessing it from a personal device. More commonly, the threat is accidental. A well-meaning employee, unaware of the risks, might use Gemini for tasks involving personally identifiable information (PII), intellectual property, or source code, creating a shadow IT problem that traditional security tools cannot see or control. This unsanctioned use of GenAI is a primary driver of AI-related breaches.

The Critical Question: Does Gemini Leak Data?

So, does Gemini leak data? The answer is nuanced. By design, Gemini has robust security controls, and Google invests heavily in protecting its infrastructure. The platform itself is not inherently “leaky.” However, the risk of a leak is fundamentally tied to its usage. Without purpose-built security overlays, any powerful tool can be misused. The primary channels for a data leak are:

- User Input: Employees pasting sensitive text or uploading confidential documents.

- Integration Risks: Insecure connections between Gemini APIs and other corporate applications.

- Endpoint Vulnerabilities: Compromised browsers or devices that expose user sessions to attackers.

Therefore, the responsibility for preventing a Gemini data leak is shared. While the provider secures the platform, the enterprise must secure how its employees and systems interact with it.

Prevention and Mitigation: A Modern Approach to AI Security

Preventing a Gemini security breach requires moving beyond traditional network-based security and implementing solutions that provide visibility and control directly at the point of interaction: the browser. This is where a Browser Detection and Response (BDR) platform, like LayerX’s Enterprise Browser Extension, becomes essential.

Implement GenAI-Aware DLP

A critical step is to deploy a DLP solution that understands the context of GenAI interactions. Legacy DLP tools often struggle with web traffic and APIs. A modern solution should be capable of:

- Monitoring Prompts: Analyzing prompt content in real time to detect and block the submission of sensitive data, such as PII, financial information, or keywords from a confidential project.

- Controlling File Uploads: Preventing employees from uploading sensitive documents to Gemini.

- Enforcing Policy: Applying risk-based guardrails that might, for example, allow general queries but block the submission of any content matching a predefined data pattern.
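To make these three capabilities concrete, here is a minimal Python sketch of a prompt and upload check. It assumes the browser extension can pass the prompt text, or text extracted from an uploaded file, to a local function before submission. The rule names, regexes, the "Project Orion" codename, and the blocked file types are hypothetical placeholders, not LayerX’s or Google’s actual policy engine.

```python
import re
from dataclasses import dataclass, field
from enum import Enum
from pathlib import PurePath


class Action(Enum):
    ALLOW = "allow"
    BLOCK = "block"


@dataclass
class Verdict:
    action: Action
    matched_rules: list = field(default_factory=list)


# Hypothetical rule set: rule names, regexes, and the "Project Orion"
# codename are illustrative placeholders, not a vendor's built-in policy.
PATTERNS = {
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "project_keyword": re.compile(r"\bproject\s+orion\b", re.IGNORECASE),
}
# 13-19 digits, optionally separated by spaces or dashes (payment-card shaped).
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")
# Hypothetical file types that are never allowed to be uploaded.
BLOCKED_EXTENSIONS = {".pem", ".key", ".kdbx"}


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, used to cut false positives on card-shaped numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d = d * 2 - 9 if d > 4 else d * 2
        total += d
    return total % 10 == 0


def scan_text(text: str) -> list:
    """Return the sorted names of every rule that matches the text."""
    hits = {name for name, rx in PATTERNS.items() if rx.search(text)}
    for m in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"\D", "", m.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            hits.add("payment_card")
    return sorted(hits)


def evaluate_prompt(prompt: str) -> Verdict:
    """Allow general queries; block any prompt matching a sensitive pattern."""
    hits = scan_text(prompt)
    return Verdict(Action.BLOCK if hits else Action.ALLOW, hits)


def evaluate_upload(filename: str, extracted_text: str) -> Verdict:
    """Block restricted file types outright; otherwise scan the extracted text."""
    if PurePath(filename).suffix.lower() in BLOCKED_EXTENSIONS:
        return Verdict(Action.BLOCK, ["blocked_file_type"])
    return evaluate_prompt(extracted_text)
```

Under this sketch, a request to summarize "Project Orion" meeting notes is blocked by the `project_keyword` rule, while a generic drafting request passes. The Luhn check keeps arbitrary 16-digit reference numbers from triggering the card rule; a real deployment would need far richer detectors and context than a handful of regular expressions.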

Mitigate Session-Based Risks

To combat the threat of a session leak, security teams need visibility into browser activity. An enterprise browser extension can identify and block malicious extensions that attempt to hijack sessions, alert on suspicious script activity within the Gemini tab, and ensure that all interactions occur within a monitored and secure environment. This provides a crucial layer of defense against endpoint-based attacks targeting GenAI tools.
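One way to approach the extension side of this is to audit each installed extension’s manifest for permissions that could reach a Gemini session. The sketch below assumes manifests are available as parsed JSON dictionaries. The permission names (`cookies`, `scripting`, `debugger`) and match-pattern syntax follow Chrome’s extension model, but the match-pattern handling is simplified and the choice of which combinations count as risky is an illustrative assumption.

```python
import re

GEMINI_HOST = "gemini.google.com"


def pattern_covers_host(pattern: str, host: str = GEMINI_HOST) -> bool:
    """Simplified approximation of Chrome match patterns for an HTTPS host."""
    if pattern == "<all_urls>":
        return True
    m = re.match(r"^(\*|https?)://([^/]+)/", pattern)
    if not m or m.group(1) == "http":
        return False  # the target host is assumed to be served over HTTPS
    pattern_host = m.group(2)
    if pattern_host == "*":
        return True
    if pattern_host.startswith("*."):
        # "*.google.com" covers google.com itself and any subdomain.
        base = pattern_host[2:]
        return host == base or host.endswith("." + base)
    return host == pattern_host


def assess_extension(manifest: dict) -> list:
    """Return sorted findings on how an extension could reach a Gemini session."""
    permissions = manifest.get("permissions", [])
    # Manifest V3 lists hosts separately; V2 mixes them into "permissions".
    hosts = list(manifest.get("host_permissions", []))
    hosts += [p for p in permissions if p == "<all_urls>" or "://" in p]
    reaches_gemini = any(pattern_covers_host(h) for h in hosts)

    findings = set()
    if reaches_gemini and "cookies" in permissions:
        findings.add("cookie_access")  # could read cookies sent to the Gemini origin
    if reaches_gemini and "scripting" in permissions:
        findings.add("runtime_script_injection")  # could inject code at runtime
    if "debugger" in permissions:
        findings.add("debugger_access")  # can attach to and instrument tabs
    for script in manifest.get("content_scripts", []):
        if any(pattern_covers_host(p) for p in script.get("matches", [])):
            findings.add("content_script_injection")  # can read the chat page
    return sorted(findings)
```

A policy layer could then block or alert on any extension that returns a non-empty list. This only inspects declared capabilities; catching an extension that actually abuses them still requires runtime monitoring of script activity in the tab.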

Discover and Secure Shadow AI

Employees will inevitably use a mix of sanctioned and unsanctioned AI tools. A comprehensive security strategy must include the discovery of this “shadow AI” usage. By monitoring all browser activity, organizations can identify which employees are using Gemini (and other GenAI tools), whether they are using corporate or personal accounts, and what kind of risk this usage introduces. This visibility allows IT and security teams to enforce consistent policies across all applications, sanctioned or not.
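The discovery step can be sketched as a simple aggregation over browser telemetry. This Python example assumes a hypothetical `BrowserEvent` record carrying the user, the visited URL, and the account signed in to that tab. The GenAI hostname list is illustrative rather than complete, and `example.com` stands in for the corporate email domain.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Optional
from urllib.parse import urlparse

# Illustrative, non-exhaustive map of GenAI hostnames to tool names.
GENAI_HOSTS = {
    "gemini.google.com": "Gemini",
    "chatgpt.com": "ChatGPT",
    "claude.ai": "Claude",
}
CORPORATE_DOMAIN = "example.com"  # hypothetical corporate email domain


@dataclass(frozen=True)
class BrowserEvent:
    """Hypothetical page-visit record collected by a browser extension."""
    user: str
    url: str
    signed_in_account: Optional[str]  # account active in the tab, if known


def account_type(account: Optional[str]) -> str:
    """Label an account as corporate, personal, or unknown by its email domain."""
    if not account or "@" not in account:
        return "unknown"
    domain = account.rsplit("@", 1)[1].lower()
    return "corporate" if domain == CORPORATE_DOMAIN else "personal"


def summarize_genai_usage(events: Iterable[BrowserEvent]) -> Counter:
    """Count visits per (user, tool, account type), ignoring non-GenAI sites."""
    usage = Counter()
    for event in events:
        tool = GENAI_HOSTS.get(urlparse(event.url).hostname or "")
        if tool is not None:
            usage[(event.user, tool, account_type(event.signed_in_account))] += 1
    return usage


def needs_review(usage: Counter) -> list:
    """Return the usage keys that did not involve a corporate account."""
    return sorted(key for key in usage if key[2] != "corporate")
```

The summary answers the questions raised above: who is using which GenAI tool, and with which kind of account, so that personal or unidentified usage can be routed to review or to a stricter policy.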

Lessons Learned for a Secure GenAI Future

The rise of tools like Gemini does not need to be a source of security anxiety. By embracing a modern, user-focused security strategy, organizations can foster innovation while protecting their most valuable assets. The key lessons are clear:

- Assume User-Driven Risk: The primary source of data exposure is user action. Security strategies must focus on monitoring and controlling the interaction between users and applications.

- Context is Everything: Security tools must understand the difference between a benign query and a high-risk data submission. Context-aware DLP is non-negotiable for securing GenAI.

- The Browser is the New Endpoint: As applications move to the web, the browser has become the central point of risk and control. Securing the browser is securing the enterprise.

Preventing an AI breach is about enabling employees to use powerful tools safely. It requires a strategic shift from blocking access to managing usage with intelligent, granular controls. With the right approach, organizations can confidently embrace the future of work, powered by GenAI and secured by a proactive and adaptive defense.