The rapid adoption of web-based AI and GenAI tools has unlocked unprecedented productivity for enterprises. From code generation to market analysis, these platforms are becoming integral to daily operations. However, this reliance introduces a new and significant attack surface: the user’s browser session. An AI session hijack is no longer a theoretical threat but a practical method for attackers to gain unauthorized access to sensitive corporate data. The very mechanisms that create a seamless user experience, session cookies, tokens, and browser caching, can be exploited to take over an active AI session, leading to severe GenAI session exposure and data leakage.

Imagine an employee using a powerful GenAI platform to summarize a confidential internal report. The session, authenticated and trusted, contains the context of this sensitive data. If an attacker can hijack this session, they gain a direct window into that conversation, able to exfiltrate the data or even manipulate the AI’s output. This article explores the mechanics behind these attacks, from the theft of a session token to sophisticated techniques like indirect prompt injection, and outlines the critical risks they pose to the modern enterprise.

The Anatomy of an AI Session Hijack

At its core, session hijacking is a form of identity theft. When a user logs into a web application, the server creates a unique session to track their activity and maintain their authenticated state. This is typically managed through a session cookie or a session token stored within the browser. This token acts as a temporary key, proving the user’s identity for the duration of the session without requiring them to re-enter their credentials for every action.

An attacker’s goal is to steal this key. By obtaining a valid session token, they can present it to the web server and effectively impersonate the legitimate user. The server, seeing a valid token, grants the attacker the same access and privileges as the user, allowing them to view, modify, or exfiltrate any data accessible within that session. For web-based AI tools, this could include proprietary code, financial projections, customer PII, or strategic plans that employees are processing.

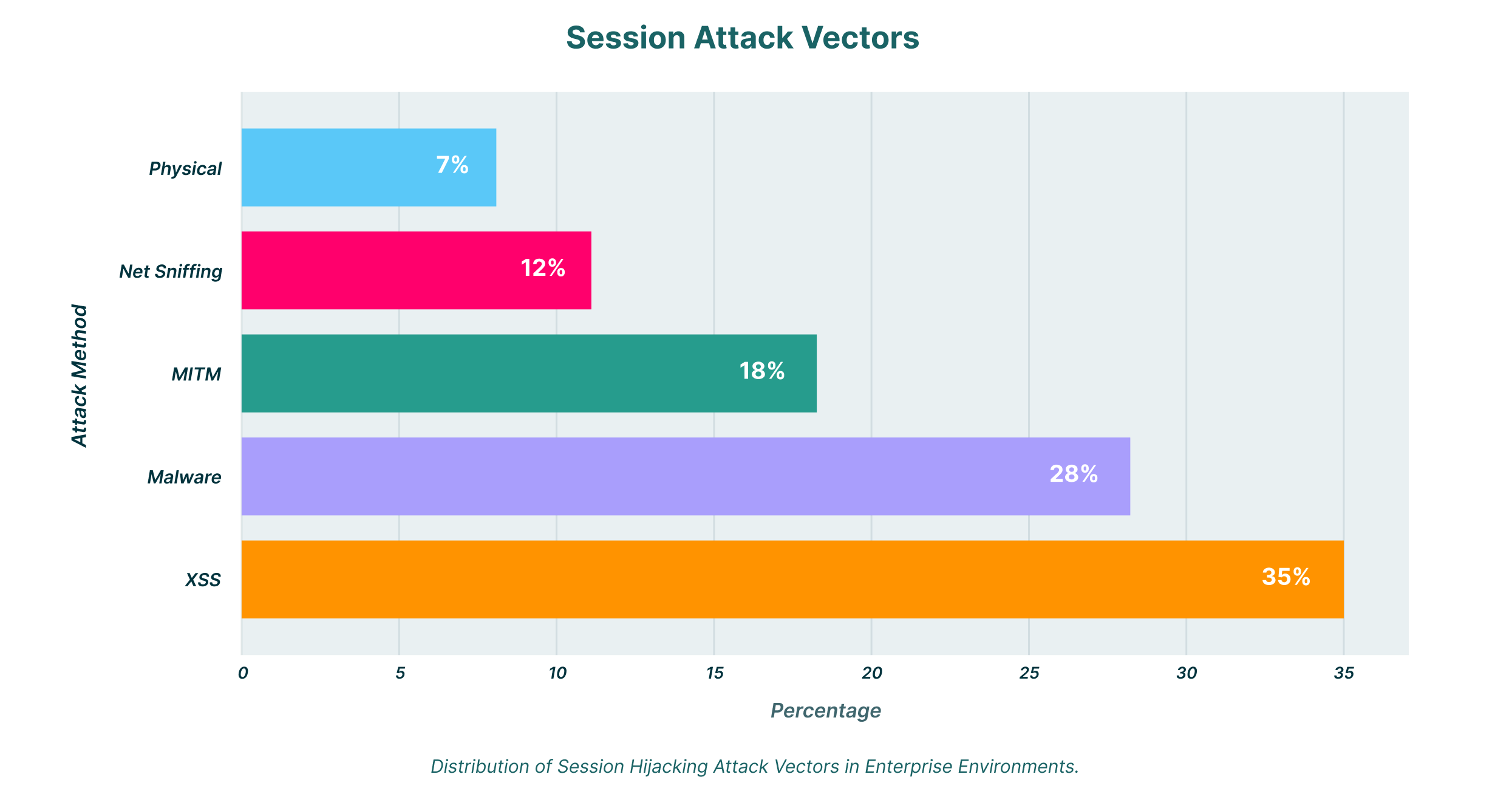

The methods for stealing these tokens are varied. They range from cross-site scripting (XSS) attacks that trick the browser into sending the cookie to an attacker, to man-in-the-middle attacks that intercept traffic between the user and the server. However, with the rise of AI, even more sophisticated vectors are emerging.

Advanced Attack Vectors: Indirect Prompt Injection and Malware

The interactive nature of GenAI platforms opens the door for novel attack techniques. One of the most concerning is indirect prompt injection. Unlike a direct attack where a malicious user inputs a harmful prompt, an indirect injection occurs when the AI model processes a compromised data source.

Consider an AI tool asked to summarize a webpage or a document that has been tampered with by an attacker. The attacker can embed a malicious prompt within that external data. When the AI processes it, the hidden command executes within the context of the user’s session. This malicious prompt could be designed to instruct the AI to reveal its own session context, including the session token, and send it to an attacker’s remote server. The user would be completely unaware that their session had just been compromised.

This attack is particularly dangerous because it doesn’t require compromising the user’s machine directly. It exploits the trust between the AI application and the data it consumes.

The threat is compounded by the persistent danger of malware. Modern info-stealer malware is often designed specifically to harvest credentials and session data from browsers. This malware can be delivered through phishing emails, malicious downloads, or even as a zero-click exploit, which requires no user interaction whatsoever to infect a system. Once active, it can scrape browser databases and local storage, searching for valuable session cookies and tokens from high-value targets like enterprise AI platforms. The stolen data is then quietly exfiltrated to a remote server controlled by the attacker, who can then use the tokens to launch an AI session hijack.

The Role of Caching and the Illusion of MFA Security

Browser caching is designed to improve performance by storing frequently accessed data locally. While beneficial for user experience, it can create another security vulnerability. Sensitive information from AI interactions, including snippets of data or session identifiers, might be stored in the cache. If an attacker gains access to the local file system, either physically or through malware, they can parse this cached data to reconstruct sessions or steal valuable information.

Many organizations rely on Multi-Factor Authentication (MFA) as a primary defense against unauthorized access. While MFA is a critical security layer, it is not a silver bullet against session hijacking. The key thing to understand is that session hijacking attacks typically occur after a user has successfully authenticated. The attacker isn’t trying to steal a password and bypass the MFA prompt; they are stealing the session token that is generated after the MFA challenge has been completed. Because the stolen token represents an already authenticated session, the attacker can often bypass MFA entirely.

The risks of an AI session hijack are magnified in an enterprise environment, particularly with the rise of “Shadow IT” or, more specifically, “Shadow AI.” When employees use unapproved, third-party AI tools for work-related tasks, they operate outside the visibility and control of the IT and security teams. This creates two significant problems, as highlighted by the challenges in GenAI and SaaS security.

First, the organization has no way to enforce security governance on these platforms. There is no assurance that these tools meet enterprise security standards, making them prime targets for attackers. Second, it leads to a massive GenAI session exposure footprint. Sensitive corporate data, intellectual property, customer lists, financial data, is fed into these unsanctioned applications. A successful session hijacking of a single employee’s account on one of these platforms could lead to a catastrophic data breach. This is the primary channel for inadvertent or malicious data leakage in the modern enterprise.

Without a comprehensive audit of all SaaS and AI applications being used, organizations are flying blind. They cannot protect against threats they cannot see, and the ease of access to web-based GenAI tools means the attack surface is growing daily.

Mitigating AI Session Hijacking with a Modern Security Approach

Protecting against AI session hijack requires a strategic shift away from traditional network-based security and toward a model that provides deep visibility and control over browser activity. Since these attacks target the browser session itself, the solution must operate at the browser level.

Enterprises need the ability to monitor all SaaS usage in real-time, sanctioned and unsanctioned alike. A robust security solution should be able to detect and block suspicious activities indicative of a hijack attempt, such as a session token being accessed by an unusual process or exfiltrated to a suspicious remote server. It should also be capable of identifying the use of “Shadow AI” tools and applying risk-based policies to prevent employees from entering sensitive data into them.

By focusing on the browser as the new security perimeter, organizations can enforce granular controls. For instance, they can prevent the pasting of sensitive data into unapproved AI chat windows or block file uploads to non-corporate file-sharing sites. This approach directly addresses the core risks of session hijacking, indirect prompt injection, and data exfiltration, providing a resilient defense against these modern threats.

In conclusion, while web-based AI tools offer immense benefits, they introduce complex security challenges that cannot be ignored. The threat of an AI session hijack is real and potent, capable of bypassing traditional defenses like MFA and leading to significant data breaches. By understanding the mechanisms of these attacks, from the theft of a session cookie to the deployment of sophisticated malware and indirect prompt injection, organizations can begin to formulate a more effective defense strategy. A proactive approach, centered on browser-level visibility and control, is essential to safely harnessing the power of AI without compromising enterprise security.