AI technology went from an experimental toy to a workplace staple in under three years. That much we all knew. What nobody expected was how fast it would surpass traditional SaaS categories not only in adoption, but in risk.

Our new Enterprise AI and SaaS Data Security Report 2025, based on real-world browsing telemetry from LayerX’s enterprise customers, shows something surprising: AI is no longer an “emerging” technology. It is now the single largest blind spot for data exfiltration in the modern enterprise.

And unlike email or file sharing, where security teams have built years of governance, the majority of AI usage is happening in the shadows: outside SSO, outside visibility, and outside enterprise control.

AI Adoption at Breakneck Speed

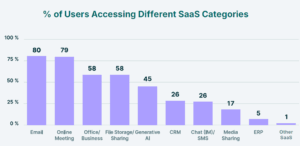

Let’s start with the headline: 45% of enterprise employees are already using generative AI tools. That’s nearly half the workforce, in less than three years since these tools appeared. By comparison, it took SaaS categories like file sharing or video conferencing over a decade to reach similar penetration.

Even more striking: 92% of all enterprise AI usage is concentrated in a single tool—ChatGPT. This makes it not just the fastest-growing category, but also the most concentrated one. A single, consumer-facing platform has become the de facto enterprise AI standard.

On its own, adoption at this scale is not the surprise. The shock comes when you look at how employees are using it.

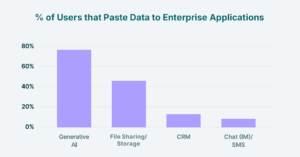

The Surprising Data Path: Copy/Paste, Not Uploads

Most CISOs worry about file uploads. And for good reason: 40% of files uploaded to GenAI tools contain sensitive PII or PCI data. That’s two in every five uploads.

But the real shock lies elsewhere. Our data shows that the majority of sensitive data doesn’t leave the enterprise via uploads at all. It leaves through copy/paste.

- 77% of employees paste data into GenAI prompts

- 82% of those pastes come from personal accounts

- On average, employees make 14 pastes/day into non-corporate accounts, and at least 3 of those paste activities contain sensitive data

This turns the humble clipboard, an often overlooked fileless and unstructured channel, into the #1 vector for sensitive data exfiltration. GenAI tools alone account for 32% of all corporate-to-personal data transfers.

Traditional DLP tools, built around file-centric monitoring, don’t even register this activity. Which means enterprises aren’t just behind on AI governance—they’re blind.

Personal Accounts Are Running the Show

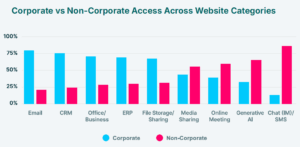

Enterprises have invested millions into identity security, federation, and SSO. Yet, according to our data, those controls are barely touching the AI category.

- 67% of AI usage happens through unmanaged personal accounts

- 71% of CRM logins and 83% of ERP logins are non-federated

- Even “corporate” accounts are often password-only, leaving them functionally indistinguishable from personal logins

The takeaway? Corporate ≠ secure. The systems housing the most sensitive customer and financial data, such as CRM, ERP, and now AI, are being accessed as if they were consumer apps. Identity controls exist, but employees simply work around them.
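To make the federated versus non-federated split concrete, here is a minimal sketch of how such a share could be tallied from login records. The record format, the `LOGINS` sample, and the `non_federated_share` helper are all hypothetical illustrations; they are not the report's methodology or data.

```python
from collections import Counter

# Hypothetical login records: (application category, authentication method).
# Real telemetry would carry far more context (user, tenant, timestamp).
LOGINS = [
    ("CRM", "sso"),
    ("CRM", "password"),
    ("CRM", "password"),
    ("ERP", "password"),
    ("ERP", "sso"),
    ("ERP", "password"),
    ("ERP", "password"),
]


def non_federated_share(logins):
    """Return {app: fraction of logins that did not go through SSO}."""
    totals = Counter()
    non_federated = Counter()
    for app, method in logins:
        totals[app] += 1
        if method != "sso":
            non_federated[app] += 1
    return {app: non_federated[app] / totals[app] for app in totals}


shares = non_federated_share(LOGINS)
for app, share in sorted(shares.items()):
    print(f"{app}: {share:.0%} non-federated")
```

Grouping by application category rather than by user keeps the output comparable to the per-category figures above, and makes it easy to see which systems to audit first.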

Governance: Nowhere to Be Found

The most counterintuitive finding from our research is this: the more embedded AI becomes in enterprise workflows, the less governance exists around it.

Employees paste sensitive data into prompts. They upload files into consumer-grade accounts. They log in with unmanaged identities. And all of this is happening outside sanctioned oversight.

Contrast this with email, where decades of DLP, archiving, and federation have built at least some form of guardrails. AI, by comparison, is the Wild West.

And yet, AI is no longer “emerging.” It already accounts for 11% of all enterprise activity, rivalling categories that have defined enterprise productivity for decades. Enterprises are running blind in their fastest-growing category.

What CISOs Should Do Next

Based on this data, here are the four most urgent priorities:

- Treat AI as a first-class enterprise category.

GenAI is no longer an experiment. ChatGPT alone reaches 43% enterprise penetration, matching Zoom’s adoption in a fraction of the time. With 45% of employees actively using AI tools and AI representing 11% of all enterprise browsing activity, GenAI must now be governed with the same rigor as email and file sharing, with monitoring for both uploads and file-less actions like copy/paste.

- Treat Non-Federated Corporate Logins as Active Shadow IT

The research reveals that even when employees use corporate credentials for critical apps like ERP (83% non-SSO) and CRM (71% non-SSO), the lack of SSO federation makes these logins functionally identical to unmanaged personal accounts. So instead of just prioritizing SSO for new SaaS apps, immediately re-classify all non-federated accounts in critical systems as unmanaged shadow IT. Your immediate action should be to run an audit focused specifically on your ERP and CRM systems to identify all non-federated access points. Treat the remediation of these gaps with the same urgency as a publicly exposed server, as they represent an invisible and uncontrolled pathway for data access and exfiltration.

- Implement Data-Aware Policies Instead of Blocking Personal Account Access

A significant portion of uploads and pastes to GenAI (67% personal accounts) and IM (87% personal accounts) tools occurs via non-corporate accounts. Simply blocking access to these services is often impractical and leads to shadow IT. Therefore, move from a binary “block/allow” posture to a context-aware, data-centric policy. Allow employees to access personal instances of ChatGPT, Google Drive, or WhatsApp Web, but deploy browser-level controls that specifically prevent sensitive corporate data (identified by classifiers) from being pasted or uploaded into them. This approach acknowledges the reality of modern workflows while creating a crucial security boundary around the data itself, not the application.

- Shift DLP Budgets from Network/File Analysis to Browser-Level Paste Control

The findings show that copy/paste has surpassed file uploads as the primary data exfiltration channel, with 77% of employees pasting data into GenAI tools and 62% of pastes into IM apps containing PII/PCI. Traditional network-based DLP solutions are blind to this file-less data movement. As a result, organizations should pivot their data protection strategy and budget allocation away from file-centric monitoring. Prioritize the deployment of browser-native security solutions or secure enterprise browsers that can inspect, classify, and block sensitive data within copy/paste events before it is submitted to a web form, AI prompt, or chat window. The key is to control the data at the point of action, the browser, and not just the data in transit.
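The data-aware policy described above can be sketched in a few lines. This is an illustration only, under stated assumptions: the `PasteEvent` shape, the `classify` and `decide` helpers, and the two regex detectors are hypothetical, and a real control would run inside the browser with far more thorough classifiers. The idea it shows is that the decision depends on what the data is and which account it is headed to, not on the application alone.

```python
import re
from dataclasses import dataclass

# Hypothetical detectors, deliberately simple; production classifiers
# would cover many more data types and reduce false positives.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify(text: str) -> set:
    """Return the set of sensitivity labels found in the pasted text."""
    labels = set()
    if EMAIL_RE.search(text):
        labels.add("PII")
    for match in CARD_RE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            labels.add("PCI")
    return labels


@dataclass(frozen=True)
class PasteEvent:
    text: str
    destination: str   # e.g. the site receiving the paste
    account_type: str  # "corporate" or "personal" (assumed to be known)


def decide(event: PasteEvent) -> str:
    """Block only sensitive data headed to a personal account."""
    if classify(event.text) and event.account_type == "personal":
        return "block"
    return "allow"
```

For example, `decide(PasteEvent("card 4111 1111 1111 1111", "chatgpt.com", "personal"))` returns `"block"`, while the same destination with ordinary meeting notes returns `"allow"`. The personal account stays usable; only the sensitive paste is stopped.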

Why LayerX Data Is Different

Whereas other reports on AI adoption rely on surveys or self-reported data, ours is built on direct browser telemetry from enterprise users themselves. That means no guesswork, just visibility into real-world activity across sanctioned, unsanctioned, and shadow apps.

This unique vantage point reveals what surveys can’t: that copy/paste, not uploads, is the primary exfiltration channel; that personal accounts dominate even business-critical workflows; and that AI isn’t just a risk on the horizon—it’s the risk happening right now.

The Bottom Line

CISOs have spent years building governance around email, file storage, and sanctioned SaaS. Meanwhile, AI has become the fastest-growing enterprise category, with almost half of employees using it daily. And almost none of it is secure.

That’s the paradox our data uncovers: the more indispensable AI becomes, the less oversight it has.

The clipboard is now the new frontier of enterprise data leaks. ChatGPT has become the de facto AI enterprise standard. And unmanaged accounts are quietly rewriting the rules of identity.

The challenge for security leaders isn’t whether to govern AI. It’s how quickly they can bring it under control—before the invisible trickle of data leakage turns into a flood.

Download the full report to uncover the full scale of AI and SaaS data risks.