LayerX Labs has identified a novel attack vector that malicious actors can use to extract sensitive data from unsuspecting users: GPT Masquerading Attacks.

In this attack, hackers impersonate legitimate GPTs hosted on OpenAI’s ChatGPT platform to lure users to fake versions of those GPTs. The fake GPT then sends the data that users share with it to a remote server, allowing the attackers to extract sensitive information.

Artificial Intelligence (AI) tools have transformed the way we interact with technology, offering innovative solutions across various domains, from healthcare to finance. These tools enhance efficiency, automate tasks, and provide insights that were previously unattainable. Among the most notable advancements in AI are Generative Pre-trained Transformers (GPTs), which are at the forefront of natural language processing.

What Are GPTs?

OpenAI recently added a new feature for creating custom GPTs on its platform and making them available to other OpenAI users, similar to an application marketplace.

Generative Pre-trained Transformers (GPTs) are advanced AI models designed to understand and generate human language. Developed by OpenAI, they are trained on extensive datasets that encompass a wide range of topics, enabling them to produce coherent and contextually relevant text based on user prompts. By analyzing language patterns, GPTs can engage in conversations, answer questions, and create content that mirrors human communication.

The versatility of GPTs allows them to be applied in numerous ways, including chatbots for customer service, content creation for blogs and articles, language translation, and even coding assistance. As these models continue to evolve, they are becoming more adept at understanding context and nuances in language, making them invaluable tools in both personal and professional settings.

However, the productivity benefits that custom GPTs provide are precisely why users may fall for GPT Masquerading Attacks.

Cybercriminals’ Playbook: Exploiting GPTs

As the capabilities of Generative Pre-trained Transformers (GPTs) continue to expand, so too does the potential for cybercriminals to exploit these technologies for malicious purposes. One significant attack vector identified by LayerX Labs, dubbed GPT Masquerading Attacks, is the ability of malicious actors to create identical or deceptively similar versions of legitimate GPTs in order to lure users into sharing sensitive information.

By replicating these models, they can manipulate users into believing they are interacting with a trusted AI, thereby gaining unauthorized access to sensitive data and information.

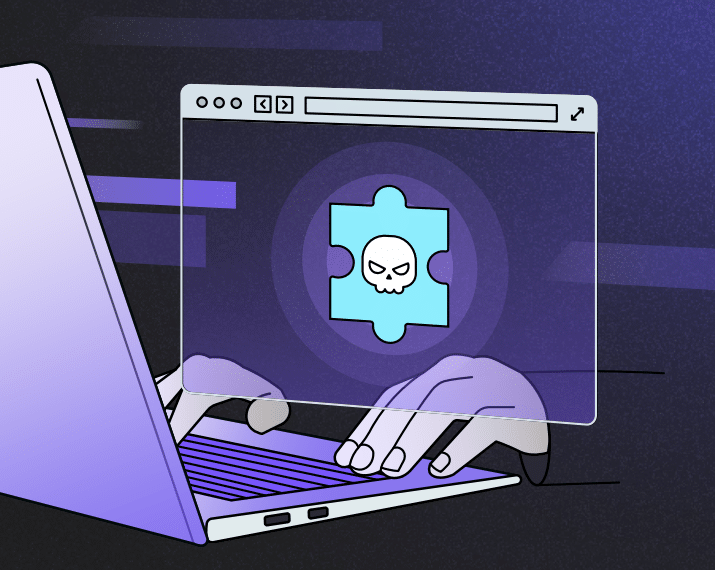

How Attackers Create Masquerading GPTs:

- Identify an existing, legitimate GPT which they want to replicate. This can be either a GPT from a known brand, or a particularly popular or useful tool that many users may be interested in using.

- Create a similar-looking GPT with the same name, icon and description. To an outside observer, this GPT will appear completely identical to the original (legitimate) GPT.

- Wait for users to search for the legitimate GPT. Distinguishing between the two GPTs is very difficult, and users can easily click into the masquerading GPT.

- Send information entered or uploaded in the GPT to an outside server. Users will be prompted to approve sharing information with an outside server, but if they expect this type of behavior (or don’t know better), they are likely to approve. Once they do, they have no control over where the data is sent.

- Once information is sent to a third-party server, it is out of the users’ hands and in the hands of attackers. While most of it will probably be benign, it can include sensitive material such as proprietary source code, customer information submitted for analysis, and more.
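Because a masquerading GPT copies the name, icon, and description of the original, the display name alone cannot establish trust. A defender can instead pin each approved GPT to a stable identifier and flag anything that merely looks like it. The following is a minimal sketch of that idea, not LayerX’s implementation: the `TRUSTED_GPTS` table, the identifiers in it, and the similarity threshold are all hypothetical, and it assumes every GPT exposes some unique identifier that an attacker cannot reuse.

```python
import unicodedata
from difflib import SequenceMatcher

# Hypothetical allowlist: display name -> identifier vetted by the security team.
TRUSTED_GPTS = {
    "Code Copilot": "g-trusted-0001",
    "Data Analyst": "g-trusted-0002",
}


def normalize(name: str) -> str:
    """Fold Unicode compatibility forms, case, and whitespace before comparing."""
    folded = unicodedata.normalize("NFKC", name).casefold()
    return " ".join(folded.split())


def classify_gpt(name: str, gpt_id: str, threshold: float = 0.85) -> str:
    """Return 'trusted', 'suspected_masquerade', or 'unknown'."""
    # An identifier on the allowlist is trusted regardless of how the name is displayed.
    if gpt_id in TRUSTED_GPTS.values():
        return "trusted"
    candidate = normalize(name)
    for trusted_name in TRUSTED_GPTS:
        similarity = SequenceMatcher(None, candidate, normalize(trusted_name)).ratio()
        # Same or near-identical name with an unvetted identifier is the warning sign.
        if similarity >= threshold:
            return "suspected_masquerade"
    return "unknown"
```

With this table, a GPT named "Code  CoPilot" or "Code Copi1ot" that carries an unvetted identifier is flagged as a suspected masquerade, while an unrelated name is simply reported as unknown.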

Cybercriminals can set up fake platforms or applications that appear to use a legitimate GPT. By mimicking the interface and functionalities of a well-known AI service, they can lure unsuspecting users into sharing personal information or credentials. Once users engage with these counterfeit models, any data entered, such as login credentials, payment information, or private messages, can be transferred to the criminals’ servers. This creates a significant risk, as users may not realize they have fallen victim to a scam until it’s too late.

Moreover, these fraudulent GPTs can be designed to engage users in ways that encourage further data disclosure. For instance, they might simulate customer support interactions, convincing users to provide more detailed personal information under the guise of resolving issues. The ability of GPTs to generate human-like text makes it challenging for users to discern between legitimate and malicious interactions, thereby amplifying the risk of data theft.

The Threat Within the Organization

GPTs have become essential tools for developers, aiding in coding, debugging, and documentation. As a result, fraudulent versions of these GPTs can significantly harm organizations both financially and reputationally by targeting developers to extract sensitive internal code.

These malicious GPTs can be designed to closely resemble trusted development tools, making it easy for unsuspecting developers to be misled. For instance, an attacker could deploy a counterfeit AI assistant that appears to function similarly to an established coding helper, enticing developers to interact with it. Once engaged, these fraudulent models can prompt users to share code snippets or proprietary algorithms under the guise of improving functionality or fixing bugs.

Below is an example of creating a Masquerading GPT impersonating the Code CoPilot GPT:

The risk is particularly acute in collaborative environments where developers frequently seek assistance from AI tools for complex tasks. When a developer unknowingly submits sensitive internal code to a fraudulent GPT, the attacker gains access to critical intellectual property and potentially exploitable vulnerabilities within the organization’s software. This not only jeopardizes the company’s competitive edge but also increases the likelihood of further cyberattacks.
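One way to limit what a developer can leak in this scenario is to scan a snippet for obvious secrets before it is pasted into any AI tool. The sketch below is illustrative only: the three patterns and the `find_secrets` helper are assumptions made for this example, and a real rule set would need to be far broader.

```python
import re

# Illustrative patterns only; real deployments need a much larger rule set.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
    "hardcoded_credential": re.compile(
        r"(?i)\b(?:api[_-]?key|secret|password|token)\b\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def find_secrets(text: str) -> list[str]:
    """Return the sorted names of every pattern that matches somewhere in the text."""
    return sorted(
        name for name, pattern in SECRET_PATTERNS.items() if pattern.search(text)
    )


if __name__ == "__main__":
    snippet = 'API_KEY = "sk_live_1234567890abcdef"\nprint("hello")'
    # A non-empty result means the snippet should not be shared as-is.
    print(find_secrets(snippet))
```

A check like this does not tell a legitimate GPT from a fake one. It only reduces the damage if the snippet ends up on an attacker’s server.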

Furthermore, cybercriminals can automate the distribution of these fake GPTs, targeting multiple organizations and developers simultaneously. By crafting messages that highlight the benefits of using their counterfeit tools, they can effectively lure in developers who are eager for support. The sophisticated nature of these AI models allows them to generate responses that appear credible and relevant, further blurring the lines between legitimate assistance and malicious intent.

Conclusion

The rise of GPTs has undoubtedly transformed how we interact with technology, enhancing productivity and efficiency across various domains. However, this advancement also comes with significant risks, particularly as cybercriminals exploit these tools for malicious purposes. By creating fraudulent versions of GPTs that mimic legitimate ones, attackers can deceive users and organizations, leading to data theft, financial loss, and reputational damage.

To address these risks, LayerX offers several key mitigation strategies tailored to protect organizations from unwanted interactions with AI models:

- Our Unique Browser Security Solution – Implement LayerX’s browser security solution to monitor and filter web interactions. The solution can detect fraudulent sites and alert users before they engage with malicious GPTs.

- Access Controls – Use LayerX’s access management features to restrict employees from using unauthorized AI tools. By controlling which applications can be accessed through company networks, organizations can reduce the risk of exposure to counterfeit models.

- Behavioral Analysis – Leverage LayerX’s behavioral analysis capabilities to identify unusual patterns of interaction with AI tools. This can help detect potential phishing attempts or unauthorized requests for sensitive code.

- Real-time Alerts – Set up real-time alerts to notify security teams of any suspicious activity related to AI interactions. This proactive approach will allow your organization to respond quickly to potential threats.
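To make the access-control and alerting ideas above concrete, here is a generic sketch of an allowlist check on GPT URLs. It does not describe how LayerX’s product works. It assumes GPT pages follow the shape `https://chatgpt.com/g/<gpt-id>-<slug>` with an identifier such as `g-abc123XYZ`; the `APPROVED_GPT_IDS` set and the returned verdict strings are hypothetical.

```python
import re
from urllib.parse import urlparse

# Hypothetical identifiers of GPTs the organization has approved.
APPROVED_GPT_IDS = {"g-abc123XYZ"}

# Assumed hosts and path shape: /g/<gpt-id>-<slug>, where the ID looks like "g-XXXX".
GPT_HOSTS = {"chatgpt.com", "chat.openai.com"}
GPT_PATH = re.compile(r"^/g/(g-[A-Za-z0-9]+)(?:-[^/]*)?/?$")


def check_gpt_url(url: str) -> str:
    """Return 'allow', 'block_and_alert', or 'not_a_gpt_url'."""
    parsed = urlparse(url)
    if parsed.hostname not in GPT_HOSTS:
        return "not_a_gpt_url"
    match = GPT_PATH.match(parsed.path)
    if match is None:
        return "not_a_gpt_url"
    # The slug can be copied by an attacker, so only the identifier is compared.
    return "allow" if match.group(1) in APPROVED_GPT_IDS else "block_and_alert"
```

Keying the decision on the identifier rather than the slug matters here: a masquerading GPT can reuse the name that appears in the URL, but under the stated assumption it cannot reuse the approved identifier.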

By integrating these strategies into your security framework, your organization can better safeguard against the exploitation of AI technologies. As GPT technology continues to evolve, leveraging specialized solutions from LayerX will be essential in maintaining a secure environment and protecting sensitive information.