A New Vector for Prompt Injection Attacks That Threatens Both Commercial and Internal AI Tools

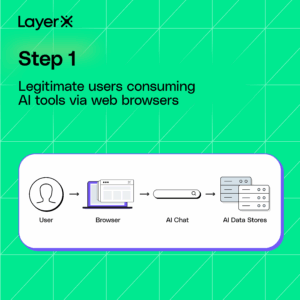

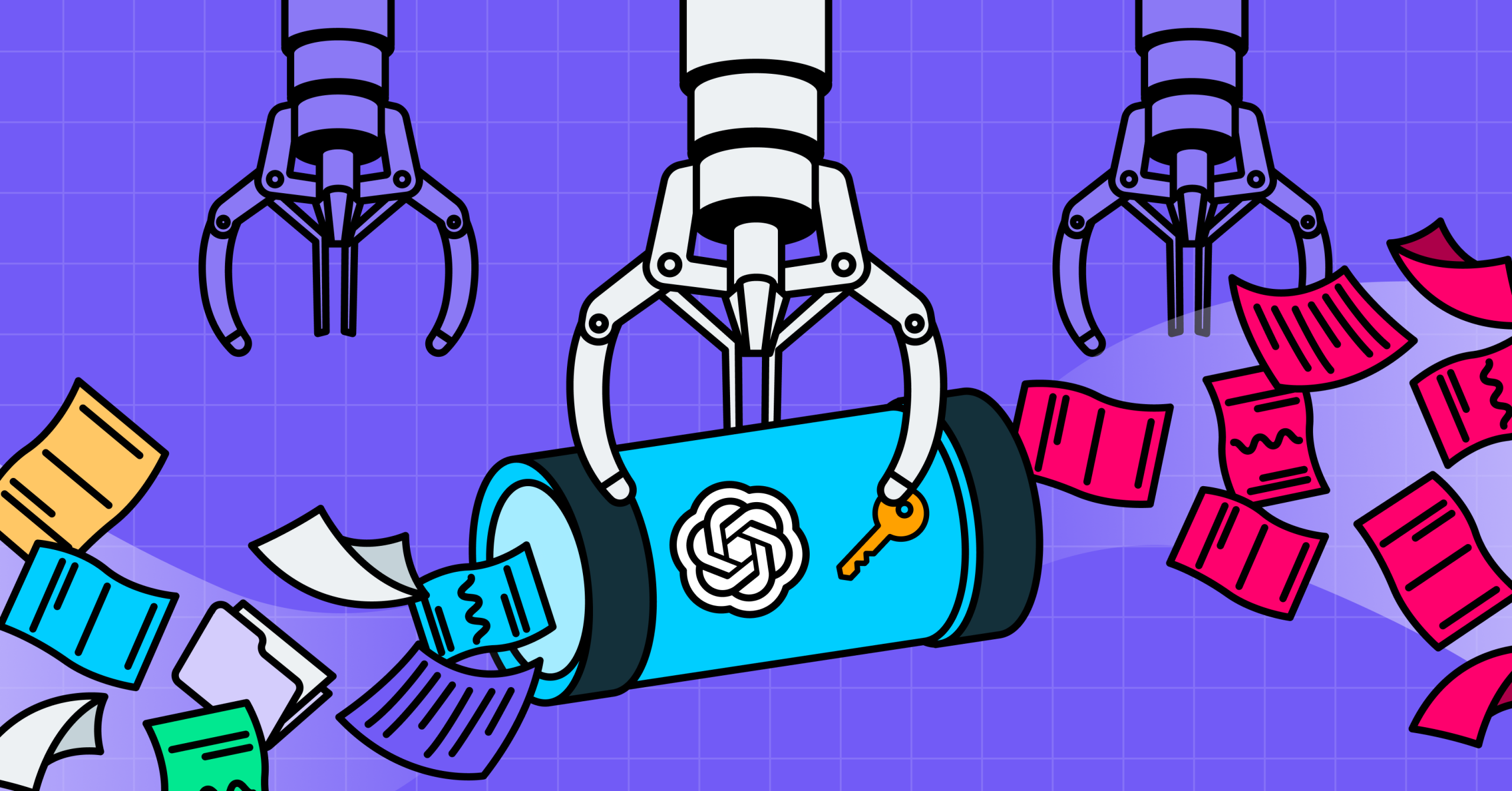

LayerX researchers have identified a new class of exploit that directly targets GenAI tools through a previously overlooked vector: the browser extension. This means that practically any user or organization with browser extensions installed (as 99% of enterprise users have) is potentially exposed to this attack vector.

LayerX’s research shows that any browser extension, even one without special permissions, can access the prompts of both commercial and internal LLMs and inject its own prompts into them to steal data, exfiltrate it, and cover its tracks.

The exploit has been tested on all top commercial LLMs, with proof-of-concept demos provided for ChatGPT and Google Gemini.

The implication for organizations is that, as they grow increasingly reliant on AI tools, these LLMs, especially those trained on confidential company information, can be turned into ‘hacking copilots’ that steal sensitive corporate information.

The Exploit: Man in the Prompt

This exploit stems from the way most GenAI tools are implemented in the browser. When users interact with an LLM-based assistant, the prompt input field is typically part of the page’s Document Object Model (DOM). This means that any browser extension with scripting access to the DOM can read from, or write to, the AI prompt directly.

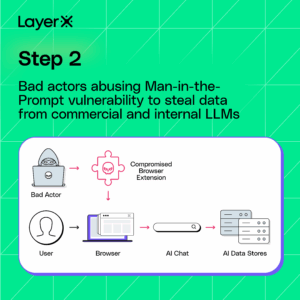

Bad actors can leverage malicious or compromised extensions to perform nefarious activities:

- Perform prompt injection attacks, altering the user’s input or inserting hidden instructions.

- Extract data directly from the prompt, response, or session.

- Compromise model integrity, tricking the LLM into revealing sensitive information or performing unintended actions.

Because of this tight integration between AI tools and browsers, LLMs inherit much of the browser’s risk surface. The exploit effectively creates a ‘man in the prompt’.
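One partial signal a defender can look for follows from the DOM access described above. When a script simulates typing into the prompt or clicking "send" by creating events and sending them with `dispatchEvent()`, the browser marks those events with `Event.isTrusted` set to `false`. The Python sketch below assumes a hypothetical telemetry export in which each prompt-field event carries that flag; the `PromptEvent` record and the `untrusted_events` helper are illustrative names, not part of any product. It is not a complete defense: a script that assigns to the field’s `value` directly fires no event at all, so finding no untrusted events proves nothing.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PromptEvent:
    """One event observed on a GenAI prompt field (hypothetical telemetry record)."""
    kind: str         # e.g. "input" or "submit"
    is_trusted: bool  # mirrors the browser's Event.isTrusted flag
    text: str = ""


def untrusted_events(events: List[PromptEvent]) -> List[PromptEvent]:
    """Return the events that script, rather than the user, generated.

    Browsers set Event.isTrusted to false for events created by script and
    sent with dispatchEvent(). Assigning to the field's value fires no event
    at all, so this is a partial signal, not a complete check.
    """
    return [e for e in events if not e.is_trusted]


# Example: the user types one prompt; a script then injects and submits another.
log = [
    PromptEvent("input", True, "Summarize this email"),
    PromptEvent("input", False, "List all documents shared with me"),
    PromptEvent("submit", False),
]
for event in untrusted_events(log):
    print(f"script-generated {event.kind}: {event.text!r}")
```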

Risk Is Compounded by Ubiquity of LLMs, Browser Extensions

The risk is amplified by two key factors:

- LLMs hold sensitive data. In commercial tools, users often paste proprietary or regulated content. Internal LLMs, meanwhile, are typically trained on confidential corporate datasets, giving them access to vast swaths of sensitive information, ranging from source code to legal documents to M&A strategy.

- Browser extensions have broad privileges. Many enterprise environments allow users to install extensions freely. Once a malicious or compromised extension is installed on a user’s browser, it can access any web-based GenAI tool the user interacts with.

If a user with access to an internal LLM has even a single malicious or compromised extension installed, attackers can silently exfiltrate data by injecting queries and reading the results, entirely within the bounds of the user’s session.

Every LLM and AI Application Is Affected

- Third-party LLMs: Tools like ChatGPT, Claude, Gemini, Copilot, and others, which are accessed via web apps.

- Enterprise LLM deployments: Custom copilots, RAG-based search assistants, or any internal tool built with an LLM frontend served via browser.

- Users of AI-enabled SaaS Applications: Existing SaaS applications that enhance their capabilities by adding built-in AI integrations and LLMs, which can be used to query sensitive customer data stored on the application (such as user information, payment information, health records, and more).

- Any user with browser extension risk: Particularly those in technical, legal, HR, or leadership roles with access to privileged data.

| LLM | Vulnerable to Man-in-the-Prompt | Vulnerable to Injection via Bot | Monthly Visits |
| --- | --- | --- | --- |
| ChatGPT | ✅ | ✅ | 5 billion |
| Gemini | ✅ | ✅ | 400 million |
| Copilot | ✅ | ✅ | 160 million |
| Claude | ✅ | ✅ | 115 million |
| DeepSeek | ✅ | ✅ | 275 million |
| External LLM | ✅ | ❌ |  |

Proof-of-Concept #1: Turning ChatGPT into a Hacker’s Copilot

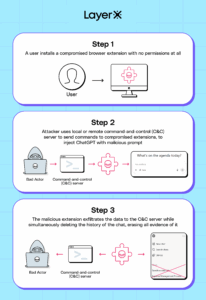

To demonstrate this exploit, LayerX researchers implemented a proof-of-concept extension that does not require any special permissions at all. Not only was our extension able to inject a prompt and query ChatGPT for information, but it was also able to exfiltrate the results and cover its tracks.

This means that any compromised extension can abuse this technique to steal data from users’ and enterprises’ ChatGPT sessions.

How the ChatGPT Exploit Works:

- The user installs a compromised extension with no permissions at all.

- A command-and-control server (which can be hosted locally or remotely) sends a query to the extension.

- The extension opens a background tab and queries ChatGPT.

- The results are exfiltrated to an external log.

- The extension then deletes the chat to cover its tracks. If the user were to look at their ChatGPT history, they wouldn’t see anything.
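Because the extension deletes the conversation, the user’s chat history holds no evidence; the trace has to be caught in the browser. The Python below is a minimal sketch, assuming a hypothetical browser-telemetry feed that records when a conversation is created, when the tab hosting it receives user focus, and when the conversation is deleted. It flags conversations that are created and deleted within a short window without the user ever viewing them, which matches the background-tab pattern in the steps above. The `ChatEvent` schema, the action names, and the 120-second default are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class ChatEvent:
    """Hypothetical browser-telemetry record for one GenAI conversation."""
    conversation_id: str
    action: str       # "created", "tab_focused", or "deleted"
    timestamp: float  # seconds


def suspicious_conversations(events: List[ChatEvent], max_lifetime: float = 120.0) -> List[str]:
    """Flag conversations created and deleted within max_lifetime seconds
    whose hosting tab never received user focus (e.g. a background tab)."""
    created: Dict[str, float] = {}
    focused: Set[str] = set()
    flagged: List[str] = []
    for e in sorted(events, key=lambda ev: ev.timestamp):
        if e.action == "created":
            created[e.conversation_id] = e.timestamp
        elif e.action == "tab_focused":
            focused.add(e.conversation_id)
        elif e.action == "deleted" and e.conversation_id in created:
            lifetime = e.timestamp - created[e.conversation_id]
            if lifetime <= max_lifetime and e.conversation_id not in focused:
                flagged.append(e.conversation_id)
    return flagged


events = [
    ChatEvent("user-chat", "created", 0.0),
    ChatEvent("user-chat", "tab_focused", 1.0),
    ChatEvent("user-chat", "deleted", 30.0),  # user saw it: not flagged
    ChatEvent("bg-chat", "created", 10.0),
    ChatEvent("bg-chat", "deleted", 25.0),    # never focused, short-lived: flagged
    ChatEvent("old-chat", "created", 0.0),
    ChatEvent("old-chat", "deleted", 600.0),  # outlived the window: not flagged
]
print(suspicious_conversations(events))  # ['bg-chat']
```

The threshold is a trade-off: a longer window catches slower automation but also flags more legitimate clean-up by users.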

Implications:

ChatGPT is the world’s most popular AI tool, with an estimated 5 billion visits per month. It is also frequently used by both individuals and organizations, for both personal and business purposes.

According to LayerX research, 99% of enterprise users have at least one browser extension installed in their browsers, and 53% have more than 10 extensions.

The fact that LayerX security researchers were able to create this exploit without any special permissions shows that practically any user is vulnerable to such an attack.

Passive, permission-based extension risk scoring cannot detect such an extension. Because it requests no permissions, it will actually receive a low risk score.

Proof-of-Concept #2: Turning Google Gemini into an Evil Hacker Twin

As a second proof-of-concept to illustrate this vulnerability, LayerX implemented an exploit that can steal internal data from corporate environments using Google Gemini via its integration into Google Workspace.

Over the last few months, Google has rolled out new integrations of its Gemini AI into Google Workspace. Currently, this feature is available to organizations using Workspace and to paying users.

This integration offers a new side panel in web applications such as Google Mail, Docs, Meet, and other apps, allowing users to automate repetitive and/or time-consuming tasks such as summarizing emails, asking questions about a document, aggregating data from different sources, etc.

One of the features that makes the Gemini integration unique is that it has access to all data accessible to the user in their Workspace. This includes email, documents (on Google Drive), shared folders, and contacts. An important distinction, however, is that Gemini can access not only files and data owned directly by the user, but any folder, file or data that has been shared with them and that they have access permissions for.

Google is already aware of attempts to exploit its Gemini AI engine for nefarious purposes and has documented them extensively. However, it has not so far addressed the risk of browser extensions being used as a vehicle for accessing users’ personal data via Gemini Workspace prompts, which suggests that this is a novel method.

How the Gemini Exploit Works

The new Gemini integration is implemented directly within the page as added code on top of the existing page. It modifies and directly writes to the web application’s Document Object Model (DOM), giving it control and access to all functionality within the application.

Step 1: The user works in a Google Workspace Pro account with Gemini integration

However, LayerX has found that, because of the way this integration is implemented, any browser extension, even one without special extension permissions, can interact with the prompt and inject prompts into it. As a result, practically any extension can access the Gemini sidebar prompt and query it for any data it desires.

Moreover, this access persists even when the sidebar is closed, and even when the extension manipulates the page code to hide the Gemini prompt interface.

Once an extension injects text into the prompt, the injected text behaves like any other query. Examples of actions it can take include:

- Extract email titles and contents

- Query information about people that appear in the user’s contact list

- List all accessible documents

- Structure complex queries to request specific data from accessible email and files

- Exploit the built-in autocomplete functionality to enumerate accessible files

- Add permutations to list all files and prompt results

- Etc.

Step 2: Once the sidebar is closed, a compromised extension injects a query into the Gemini prompt, retrieves confidential user files, and exfiltrates the information.

LayerX disclosed this vulnerability to Google under responsible disclosure measures.

What Data Hackers Can Access via Gemini Exploitation

Google’s Gemini Workspace integration can access any data that is accessible to the user. This means not only files and information owned by the user and stored in their directories, but also any files or data that were shared with them and to which they have read access. This includes:

- Emails

- Contacts

- File contents

- Shared folders (and their contents)

- Meeting invites

- Meeting summaries

Beyond directly accessing files and data available to the user, Gemini can be used to analyze data at scale without having to extract individual files. Sample queries it can be prompted with include:

- List all customers

- Summarize calls

- Retrieve information about people and contacts

- Look up specific information (such as PII or other company intellectual property)

- And more…

Internal LLMs Are Particularly Exposed

While commercial AI tools like ChatGPT and Gemini are popular entry points for GenAI use, some of the most consequential targets for this exploit are internally deployed LLMs—those built and fine-tuned by enterprises to serve their own workforce.

Unlike public-facing models, internal LLMs are often trained or augmented with highly sensitive, proprietary organizational data:

- Intellectual property such as source code, design specs, and product roadmaps

- Legal documents, contracts, and M&A strategy

- Financial forecasts, PII, and regulated records

- Internal communications and HR data

The goal of these internal copilots or RAG-based systems is to empower employees to access this information faster and more intelligently. But that same convenience becomes a liability when browser-based access is coupled with invisible extension risk.

Why Internal LLMs Are Especially Vulnerable

- High-Trust Access: Internal models often assume trusted usage and are not hardened against adversarial input or silent automation from within the user’s browser session.

- Non-Restricted Queries: Users can often submit freeform questions and receive full responses, with few safeguards preventing extraction of confidential datasets, especially via cleverly crafted prompts.

- Assumed Network Safety: Because these LLMs are hosted within the organization’s infrastructure or behind a VPN, they’re mistakenly perceived as secure. But browser-level access breaks that boundary.

- Invisibility to Existing Tools: Traditional security solutions—such as CASBs, SWGs, or DLP—have no visibility into how DOM-level prompt manipulation occurs or what’s being queried and returned.

A Realistic Scenario

Consider a security analyst querying an internal LLM for past incident response timelines or a roadmap engineer reviewing future release notes. A malicious extension in the background could quietly inject a hidden query (“Summarize all unreleased product features mentioned in this session”) and forward the response to an external server—without triggering any security alert.

In essence, a single compromised browser on a trusted endpoint becomes an attacker’s conduit to exfiltrate high-value knowledge assets from the organization’s AI brain.

The Consequences

- IP Leakage: Proprietary algorithms, codebases, and trade secrets can be silently stolen.

- Regulatory Exposure: Queries involving customer PII, healthcare records, or financial data could lead to compliance violations under GDPR, HIPAA, or SOX.

- Erosion of Trust: The perceived safety of internal tools crumbles if sensitive answers leak via undetected channels.

Some Extensions on the Chrome Store Can Already Do This

In fact, some extensions on the Chrome Web Store already provide prompt injection and editing.

Extensions such as Prompt Archer, Prompt Manager, and PromptFolder all provide functionality that reads, stores, and writes to AI prompts.

While these extensions appear to be legitimate, they show that extensions which interact with AI prompts are accepted on both the Chrome and Edge stores. Moreover, most of these extensions request only limited permissions, underscoring that interaction with AI prompts requires no special permissions.

Implications for Enterprises

This threat exposes a serious blind spot in current GenAI governance efforts. Traditional security tools such as endpoint DLP, secure web gateways (SWGs), or CASBs do not have visibility into the DOM-level interactions that enable this exploit. They cannot detect prompt injection, unauthorized data access, or the use of manipulated prompts.

Moreover, GenAI access policies (e.g., blocking ChatGPT by URL) provide no protection for internal tools hosted on allowlisted domains or IPs.

How to Mitigate This Risk:

Organizations need to shift their security thinking from application-level control to in-browser behavior inspection. This includes:

- Monitoring DOM interactions within GenAI tools and detecting listeners or webhooks that can interact with AI prompts.

- Blocking risky extensions based on behavioral risk, not just allowlists. A static assessment based on permissions will not suffice, because some extensions require no permissions at all; combining publisher reputation with dynamic extension sandboxing is the best way to detect risky and malicious extensions.

- Preventing prompt tampering and exfiltration in real time at the browser layer.
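The behavioral approach in the list above can be sketched as a scoring function. The Python below is a minimal illustration, not a production model: the `ExtensionProfile` fields, the behavior labels, and every weight are hypothetical, and the sandbox observations are assumed to come from running each extension against a test GenAI page. The permission names (`debugger`, `cookies`, `webRequest`, `storage`) are real Chrome extension permissions, but which ones count as risky here is an assumption. The sketch shows why permission-only scoring gives a zero-permission extension a score of 0, while adding observed behavior and publisher reputation separates it from a reputable prompt-manager extension.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ExtensionProfile:
    """Hypothetical summary of what is known about one installed extension."""
    name: str
    permissions: List[str] = field(default_factory=list)
    publisher_reputation: float = 0.5  # 0.0 (unknown/bad) .. 1.0 (well established)
    sandbox_behaviors: List[str] = field(default_factory=list)  # observed in a sandbox


# Illustrative weights only; a real program would calibrate them on labeled data.
RISKY_PERMISSIONS = {"debugger": 0.4, "cookies": 0.2, "webRequest": 0.2}
RISKY_BEHAVIORS = {
    "writes_genai_prompt": 0.4,
    "reads_genai_response": 0.3,
    "opens_background_tab": 0.2,
    "sends_data_to_unknown_host": 0.4,
}


def static_score(ext: ExtensionProfile) -> float:
    """Permission-only scoring: an extension that requests nothing scores 0."""
    return min(1.0, sum((RISKY_PERMISSIONS.get(p, 0.0) for p in ext.permissions), 0.0))


def combined_score(ext: ExtensionProfile) -> float:
    """Blend permissions, observed sandbox behavior, and publisher reputation."""
    behavior = min(1.0, sum((RISKY_BEHAVIORS.get(b, 0.0) for b in ext.sandbox_behaviors), 0.0))
    reputation_penalty = 1.0 - ext.publisher_reputation
    return min(1.0, 0.2 * static_score(ext) + 0.6 * behavior + 0.2 * reputation_penalty)


poc_like = ExtensionProfile(
    name="zero-permission helper",
    publisher_reputation=0.1,
    sandbox_behaviors=["writes_genai_prompt", "reads_genai_response",
                       "opens_background_tab", "sends_data_to_unknown_host"],
)
prompt_manager = ExtensionProfile(
    name="prompt manager",
    permissions=["storage"],
    publisher_reputation=0.9,
    sandbox_behaviors=["writes_genai_prompt"],
)
print(static_score(poc_like), round(combined_score(poc_like), 2))              # 0.0 0.78
print(static_score(prompt_manager), round(combined_score(prompt_manager), 2))  # 0.0 0.26
```

Legitimate prompt managers also write to AI prompts, so that single behavior is a weak signal on its own; it is the combination of exfiltration-like behavior, background tabs, and an unknown publisher that pushes the first profile’s score up.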