The adoption of Generative AI is reshaping the enterprise. These powerful models offer unprecedented boosts in productivity, but this new capability comes with a significant trade-off: a new and complex attack surface. Organizations are discovering that enabling employees to use GenAI tools without proper oversight exposes them to critical risks, including the exfiltration of sensitive PII, intellectual property leakage, and compliance violations. Conducting a thorough AI risk assessment is the foundational step for any organization looking to harness the power of AI securely.

Many security leaders find themselves in a difficult position. How do you quantify the risks of an employee pasting proprietary code into a public LLM? What’s the real impact of a team relying on a “shadow AI” tool that hasn’t been vetted? This article provides a structured approach to answering these questions. We will explore a practical AI risk assessment framework, offer an actionable template, examine the types of tools needed for enforcement, and outline best practices for creating a sustainable AI governance program. A proactive generative AI risk assessment is no longer optional; it’s essential for secure innovation.

Why a Specialized AI Security Risk Assessment is Non-Negotiable

Traditional risk management frameworks were not designed for the unique challenges posed by Generative AI. The interactive, black-box nature of Large Language Models (LLMs) introduces dynamic threat vectors that legacy security solutions struggle to address. A specialized AI security risk assessment is crucial because the risks are fundamentally different and more fluid than those associated with conventional software.

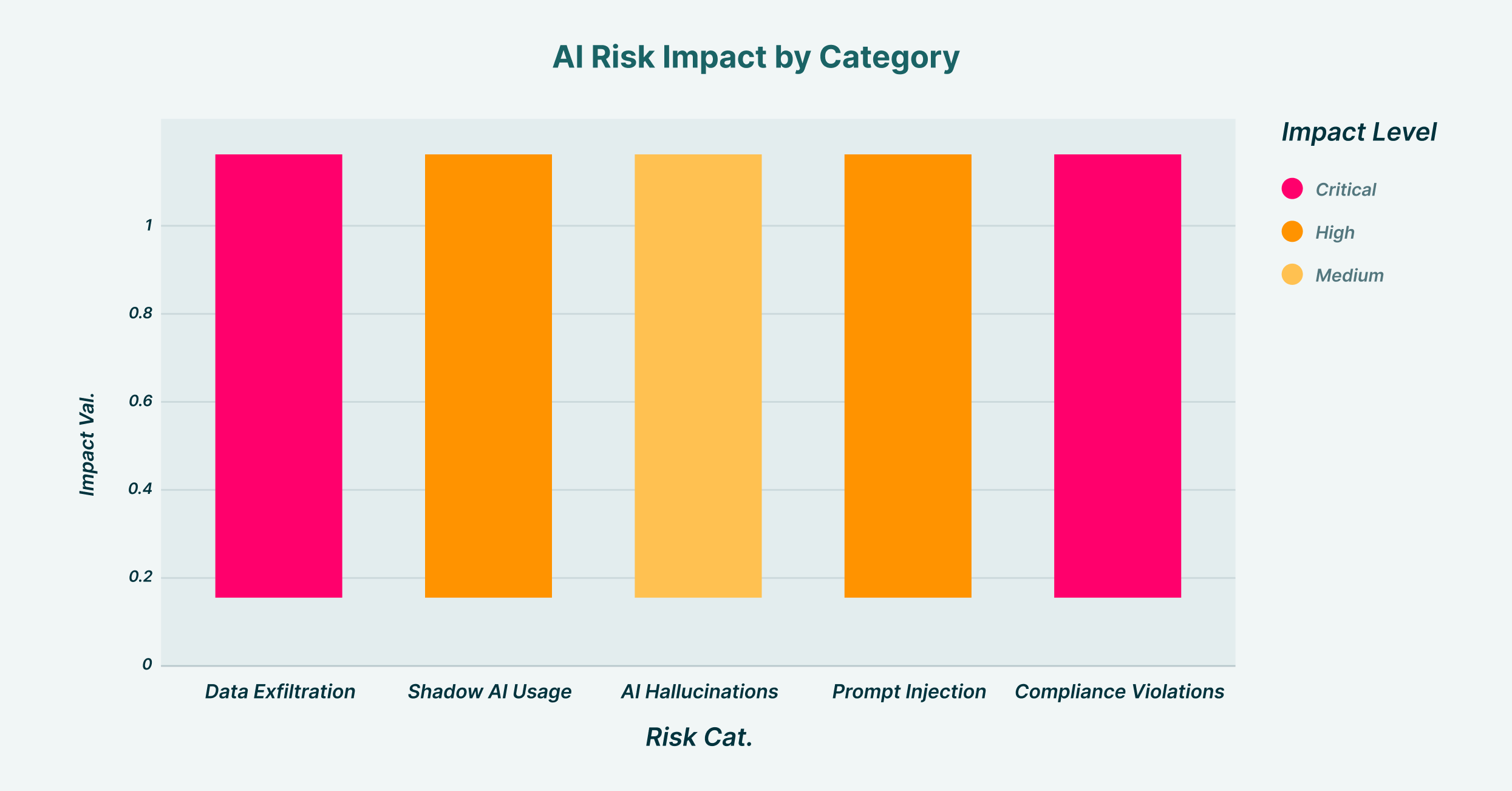

AI Risk Categories by Impact Level Assessment

The core challenges that necessitate a dedicated assessment include:

- Data Privacy and Exfiltration: This is arguably the most immediate and significant risk. Without proper controls, employees can easily copy and paste sensitive corporate data into public GenAI platforms. This could include customer lists, financial projections, unreleased source code, or M&A strategy documents. Once that data is submitted to a public LLM, the organization loses control over it, and it may be used to train future versions of the model.

- Shadow AI and Unsanctioned Usage: The accessibility of browser-based AI tools means any employee can start using a new application without IT’s knowledge or approval. This “shadow AI” phenomenon creates significant security blind spots. An effective AI risk assessment strategy must begin with discovering and mapping all AI usage across the organization, not just the officially sanctioned tools.

- Inaccurate Outputs and “Hallucinations”: GenAI models can produce confident but entirely incorrect information. If an employee uses AI-generated code that contains a subtle flaw or bases a strategic decision on a fabricated data point, the consequences can be severe. This risk vector affects operational integrity and business continuity.

- Prompt Injection and Malicious Use: Threat actors are actively exploring ways to manipulate GenAI. Through carefully crafted prompts, an attacker could trick an AI tool into generating sophisticated phishing emails, malware, or disinformation. Imagine a scenario where a compromised employee account is used to interact with an internal AI assistant, instructing it to exfiltrate data by disguising it as a routine report.

- Compliance and Intellectual Property (IP) Risks: Navigating the legal landscape of AI is complex. Using a GenAI tool trained on copyrighted material could expose the organization to IP infringement claims. Furthermore, feeding customer data into an LLM without proper consent or security measures can lead to severe penalties under regulations like GDPR and CCPA.
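
The discovery step described under Shadow AI can be illustrated with a small sketch. It assumes you already have a list of visited URLs (for example, exported from a proxy or browser log) and two hand-maintained domain lists. The domain names, `KNOWN_AI_DOMAINS`, `SANCTIONED_AI_DOMAINS`, and `find_shadow_ai` are all hypothetical names chosen for illustration, not part of any product.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical lists; a real inventory would come from your own vetting process.
SANCTIONED_AI_DOMAINS = {"llm.internal.example.com"}
KNOWN_AI_DOMAINS = {
    "llm.internal.example.com",
    "chat.example-ai.com",
    "pdf-summarizer.example.net",
}


def find_shadow_ai(visited_urls):
    """Count visits to known AI domains that are not on the sanctioned list."""
    hits = Counter()
    for url in visited_urls:
        # hostname is None for malformed or scheme-less URLs; treat those as no match.
        host = (urlparse(url).hostname or "").lower()
        if host in KNOWN_AI_DOMAINS and host not in SANCTIONED_AI_DOMAINS:
            hits[host] += 1
    return dict(hits)


if __name__ == "__main__":
    sample = [
        "https://chat.example-ai.com/c/1",
        "https://chat.example-ai.com/c/2",
        "https://llm.internal.example.com/q",
        "https://news.example.org/",
    ]
    print(find_shadow_ai(sample))
```

A static domain list only catches tools you already know about, so a sketch like this is a starting point for the inventory rather than a complete discovery mechanism.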

Building Your AI Risk Assessment Framework

A haphazard approach to AI security is destined to fail. An AI risk assessment framework provides a systematic, repeatable process for identifying, analyzing, and mitigating GenAI-related threats. This structured approach ensures that all potential risks are considered and that controls are applied consistently across the organization.

A comprehensive framework should be built around five core stages:

- Inventory and Discovery: The first principle of security is visibility. You cannot protect what you cannot see. The initial step is to create a complete inventory of all GenAI applications and platforms being used by employees. This includes both company-sanctioned tools and the shadow AI services accessed directly through the browser. This stage is critical for understanding the true scope of your organization’s AI footprint.

- Risk Identification and Analysis: Once you have your inventory, the next step is to analyze each application to identify potential threats. For each tool, consider the types of data it can access and the ways it could be misused. For instance, an AI-powered code assistant has a different risk profile from an AI image generator. This analysis should be contextual, linking the tool to specific business processes and data sensitivities.

- Impact Assessment: After identifying risks, you must estimate their potential business impact. This involves evaluating the worst-case scenario for each risk across several dimensions: financial (e.g., regulatory fines, incident response costs), reputational (e.g., loss of customer trust), operational (e.g., business disruption), and legal (e.g., litigation, IP infringement). Assigning an impact rating (e.g., High, Medium, Low) helps prioritize which risks to address first.

- Control Design and Implementation: This is where risk assessment translates into action. Based on the risk analysis and impact assessment, you will design and implement specific security controls. These are not just policies on a shelf; they are technical guardrails enforced by technology. For GenAI, controls might include:

  - Blocking access to high-risk, unvetted AI websites.

  - Preventing the pasting of sensitive data patterns (like API keys, PII, or internal project codenames) into any GenAI prompt.

  - Restricting file uploads to AI platforms.

  - Enforcing read-only permissions to prevent data submission.

  - Displaying real-time warning messages to educate users about risky actions.

- Monitoring and Continuous Review: The GenAI ecosystem evolves at an astonishing pace. New tools and new threats emerge weekly. An AI risk assessment is not a one-time project but a continuous lifecycle. Your framework must include provisions for ongoing monitoring of AI usage and regular reviews of your risk assessments and controls to ensure they remain effective.
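
One of the controls listed under Control Design and Implementation, preventing the pasting of sensitive data patterns, can be sketched as a simple pattern check. This is a minimal illustration only: the regular expressions, the `sk_`/`key_` key format, and the function names `check_paste` and `decide` are assumptions made for the example, and real deployments would need patterns tuned to their own data and a far lower tolerance for false negatives.

```python
import re

# Illustrative patterns only; these are assumptions, not a vetted detection set.
SENSITIVE_PATTERNS = {
    "api_key": re.compile(r"\b(?:sk|key)_[A-Za-z0-9]{16,}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "project_codename": re.compile(r"\bProject Phoenix\b", re.IGNORECASE),
}


def check_paste(text):
    """Return the sorted names of sensitive patterns found in the pasted text."""
    return sorted(
        name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)
    )


def decide(text):
    """Block the paste if anything sensitive matches; otherwise allow it."""
    findings = check_paste(text)
    return ("block", findings) if findings else ("allow", findings)


if __name__ == "__main__":
    print(decide("Summarize the Project Phoenix roadmap for alice@example.com"))
    print(decide("Write a haiku about autumn"))
```

Returning the matched pattern names alongside the decision makes it possible to show the user a specific warning, which ties this control to the real-time warning messages in the same list.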

Your Actionable AI Risk Assessment Template

To translate theory into practice, a standardized AI risk assessment template is an invaluable asset. It ensures that assessments are performed consistently across all departments and applications. While a simple spreadsheet can be a starting point, the goal is to create a living document that informs your security posture.

Here is a sample template that your cross-functional AI governance team can adapt and use.

| AI Application | Business Use Case | Data Sensitivity | Identified Risk(s) | Likelihood | Impact | Risk Score | Mitigation Controls | Residual Risk |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Public ChatGPT-4 | General content creation, summarization | Public, Internal (Non-Sensitive) | Data exfiltration, Inaccurate outputs | High | Medium | High | Block paste of sensitive data patterns (e.g., PII, ‘Project Phoenix’), User training | Low |
| Unsanctioned PDF Analyzer | Summarizing external reports | Unknown, potentially Confidential | Shadow AI, Malware risk, Data leakage | Medium | High | High | Block application access entirely | N/A |
| GitHub Copilot | Code generation and assistance | Proprietary Source Code | IP leakage, Insecure code suggestions | High | High | Critical | Monitor activity, Prevent upload of key repository files, Code scanning | Medium |
| Sanctioned Internal LLM | Internal knowledge base queries | Internal, Confidential | Prompt injection, Insider threat | Low | Medium | Low | Role-based access control (RBAC), Audit logs | Low |

This template serves as a starting point for any generative AI risk assessment, forcing teams to think through the specific context of how each tool is used and what specific controls are necessary to reduce risk to an acceptable level.
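
The template does not state how the Risk Score column is derived from Likelihood and Impact. One common convention, shown here purely as an assumption, is to map each rating to a number and bucket the product. The numeric values and thresholds below are hypothetical; they were chosen so that the function reproduces the four sample rows above, and your governance team may prefer a different matrix.

```python
# Assumed numeric mapping for the qualitative ratings used in the template.
LEVELS = {"Low": 1, "Medium": 2, "High": 3}


def risk_score(likelihood, impact):
    """Map likelihood and impact ratings to a risk rating via their product."""
    product = LEVELS[likelihood] * LEVELS[impact]
    # Thresholds are illustrative assumptions, not a standard.
    if product >= 9:
        return "Critical"
    if product >= 6:
        return "High"
    if product >= 3:
        return "Medium"
    return "Low"


if __name__ == "__main__":
    sample_rows = [
        ("Public ChatGPT-4", "High", "Medium"),
        ("Unsanctioned PDF Analyzer", "Medium", "High"),
        ("GitHub Copilot", "High", "High"),
        ("Sanctioned Internal LLM", "Low", "Medium"),
    ]
    for app, likelihood, impact in sample_rows:
        print(app, "->", risk_score(likelihood, impact))
```

Encoding the matrix once keeps scores consistent when different departments fill in the template independently.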

From Manual Spreadsheets to a Dedicated AI Risk Assessment Tool

While a manual AI risk assessment template is a great first step, it has limitations. Spreadsheets are static, difficult to maintain at scale, and lack real-time enforcement capabilities. As your organization’s use of AI matures, you will need a dedicated AI risk assessment tool to move from a reactive to a proactive security posture. The market for AI risk tools is expanding, but not all are created equal.

When evaluating an AI risk assessment tool, consider these categories:

- SaaS Security Posture Management (SSPM): These tools are effective at discovering sanctioned SaaS applications and identifying misconfigurations. However, they often lack visibility into browser-based “shadow AI” usage and cannot control user interactions within the application itself.

- Data Loss Prevention (DLP): Traditional DLP solutions can be configured to block sensitive data patterns, but they often lack the contextual understanding of modern web applications. They may struggle to differentiate between a legitimate and a risky interaction within a GenAI chat interface, leading to either false positives that disrupt workflows or missed threats.

- Enterprise Browser Extensions: This emerging category represents a more effective approach. A security-focused browser extension, like the one offered by LayerX, operates directly within the browser. This provides granular visibility and control over user activity on any website, including GenAI platforms. This solution allows security teams to monitor all user interactions, such as pastes, form submissions, and uploads, and enforce policies in real time. For example, a policy could prevent an employee from pasting text identified as “source code” into a public LLM, effectively mitigating the risk of IP leakage without blocking the tool entirely. This makes the browser extension a powerful tool for implementing the controls defined in your AI security risk assessment.

Ultimately, the most effective strategy often combines intelligent tools that automate discovery and monitoring with a solution like LayerX that enforces granular, context-aware policies at the point of risk: the browser.

Best Practices for a Sustainable AI Risk Assessment Program

A successful GenAI security strategy goes beyond frameworks and tools; it requires a cultural shift and a commitment to continuous improvement. The following best practices can help ensure your AI risk assessment program is both effective and sustainable.

- Establish a Cross-Functional AI Governance Committee: AI risk is not just a security problem; it’s a business problem. Your governance team should include representatives from Security, IT, Legal, Compliance, and key business units. This ensures that risk decisions are balanced with business objectives and that policies are practical to implement.

- Develop a Clear Acceptable Use Policy (AUP): Employees need clear guidance. The AUP should explicitly state which AI tools are sanctioned, the types of data that are permissible to use with them, and the user’s responsibilities for secure usage. This policy should be a direct output of your risk assessment process.

- Prioritize Continuous User Education: Your employees are the first line of defense. Training should move beyond annual compliance modules and focus on real-world scenarios. Use real-time “teachable moments,” such as a pop-up warning when a user attempts to paste sensitive data, to reinforce secure behaviors.

- Adopt a Risk-Based, Granular Approach: Instead of blocking all AI, which can stifle innovation, use your risk assessment to apply granular controls. Allow low-risk use cases while enforcing strict controls on high-risk activities. For example, permit the use of a public GenAI tool for marketing copy but block its use for analyzing financial data. This nuanced approach is only possible with a tool that provides deep visibility into user actions.

- Integrate Technology for Real-Time Enforcement: Policy and training are essential but insufficient on their own. Technology is required to enforce the rules. An enterprise browser extension provides the technical backbone for your AUP, translating written policy into real-time prevention and making your AI risk assessment an active defense mechanism rather than a passive document.
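
The risk-based, granular approach described above can be pictured as a lookup from tool and data category to an action. The sketch below is a hypothetical illustration, not how any particular enforcement product works: the category names, the `POLICY` table, the `evaluate` function, and the choice to block unlisted combinations by default are all assumptions.

```python
# Hypothetical policy table: (tool category, data category) -> action.
POLICY = {
    ("public_genai", "marketing_copy"): "allow",
    ("public_genai", "financial_data"): "block",
    ("public_genai", "source_code"): "block",
    ("internal_llm", "financial_data"): "warn",
}

# Assumption: combinations that have not been assessed are blocked until reviewed.
DEFAULT_ACTION = "block"


def evaluate(tool, data_category):
    """Return the action for a tool and data category, falling back to the default."""
    return POLICY.get((tool, data_category), DEFAULT_ACTION)


if __name__ == "__main__":
    print(evaluate("public_genai", "marketing_copy"))
    print(evaluate("public_genai", "financial_data"))
    print(evaluate("unknown_tool", "marketing_copy"))
```

Keeping the policy as data rather than code means the table can be generated directly from the risk assessment template, so the written AUP and the enforced rules stay in step.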

Secure Your AI-Powered Future with Proactive Risk Management

Generative AI offers transformative potential, but realizing its benefits safely requires a proactive and structured approach to managing its risks. By implementing a robust AI risk assessment framework, utilizing a practical template, and deploying the right enforcement tools, organizations can build a secure bridge to an AI-powered future.

The journey begins with visibility and moves to control. Understanding where and how GenAI is being used is the first step. LayerX provides the critical visibility and granular control needed to turn your AI risk assessment from a checklist into a dynamic defense system, allowing your organization to innovate confidently and securely.