The integration of Generative AI into enterprise workflows is not a future-tense proposition; it’s happening right now, at a pace that often outstrips security and governance capabilities. For every documented, sanctioned use of an AI tool that boosts productivity, there are countless instances of “shadow” usage, exposing organizations to significant threats. The challenge for security analysts, CISOs, and IT leaders is clear: how do we enable the innovation AI promises without inviting unacceptable risks? The answer lies in a disciplined, proactive approach to AI risk management. This isn’t about blocking progress; it’s about building guardrails that allow your organization to accelerate safely.

The Growing Necessity of AI Governance

Before any effective risk strategy can be implemented, a foundation of AI governance must be established. The rapid, decentralized adoption of AI tools means that without a formal governance structure, organizations are operating in the dark. Employees, eager to improve their efficiency, will independently adopt various AI platforms and plugins, often without considering the security implications. This creates a complex web of unsanctioned SaaS usage, where sensitive corporate data, from PII and financial records to intellectual property, can be unintentionally exfiltrated to third-party Large Language Models (LLMs).

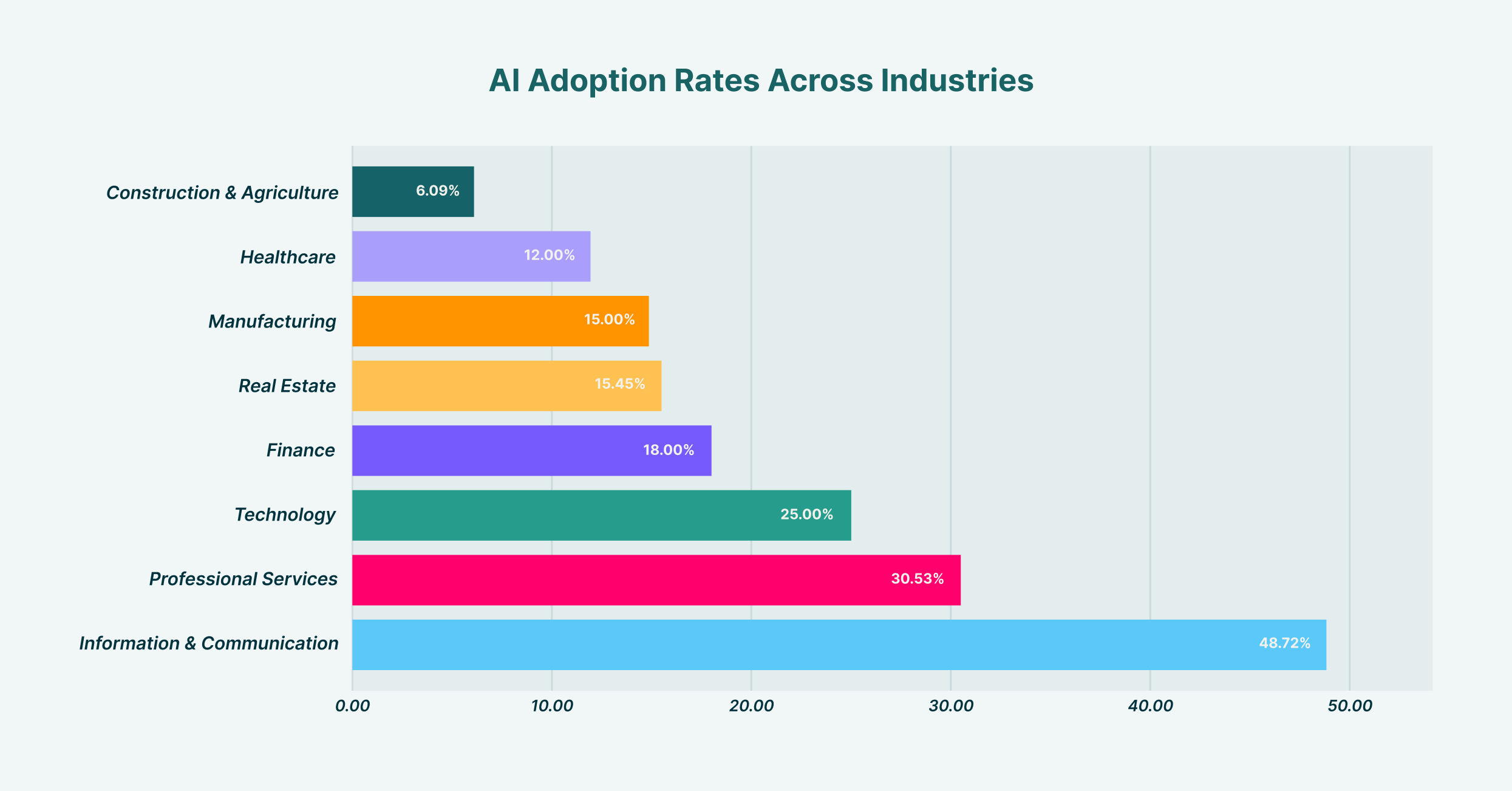

AI adoption varies significantly across industries, with Information & Communication highest at 48.7% and Construction & Agriculture lowest at 6.1%

Establishing robust AI governance involves creating a cross-functional team, typically including representatives from IT, security, legal, and business departments. This committee is tasked with defining the organization’s stance on AI. What is our risk appetite? Which use cases are encouraged, and which are forbidden? Who is accountable when an AI-related incident occurs? Answering these questions provides the clarity needed to build policies and controls. Without this top-down strategic direction, any attempt at managing risk becomes a series of disjointed, reactive measures rather than a cohesive defense. This governance framework becomes the blueprint for all subsequent security efforts, ensuring that technology, policy, and user behavior are aligned.

Building Your AI Risk Management Framework

With a governance structure in place, the next step is to construct a formal AI risk management framework. This framework operationalizes your governance principles, turning high-level strategy into concrete, repeatable processes. It provides a structured method for identifying, assessing, mitigating, and monitoring AI-related risks across the organization. Rather than reinventing the wheel, organizations can adapt established models, such as the NIST AI Risk Management Framework, to fit their specific operational context and threat landscape.

The development of an effective AI risk management framework should be a methodical process. It begins with creating a comprehensive inventory of all AI systems in use, both sanctioned and unsanctioned. This initial discovery phase is critical; you cannot protect what you cannot see. Following discovery, the framework should outline procedures for risk assessment, assigning scores based on factors like the type of data being processed, the model’s capabilities, and its integration with other critical systems. Mitigation strategies are then designed based on this assessment, ranging from technical controls and user training to outright prohibition of high-risk applications. Finally, the framework must include a cadence for continuous monitoring and review, because both the AI ecosystem and your organization’s use of it will constantly evolve. Whichever AI risk management framework you adopt, the most successful ones are living components of the organization’s security program rather than static documents.
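The scoring step described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the `AISystem` fields, the 1-to-4 scales, the penalty for unsanctioned tools, and the tier thresholds are all assumptions that an organization would replace with values reflecting its own risk appetite.

```python
from dataclasses import dataclass

# Hypothetical scale: higher number means more sensitive data.
DATA_SENSITIVITY = {"public": 1, "internal": 2, "confidential": 3, "restricted": 4}


@dataclass
class AISystem:
    name: str
    sanctioned: bool
    data_class: str   # highest data classification the tool touches
    capability: int   # assumed scale: 1 (text helper) to 4 (takes autonomous actions)
    integration: int  # assumed scale: 1 (standalone) to 4 (wired into critical systems)


def risk_score(system: AISystem) -> int:
    """Sum the three factors; add an assumed +2 penalty for unsanctioned tools."""
    score = DATA_SENSITIVITY[system.data_class] + system.capability + system.integration
    if not system.sanctioned:
        score += 2
    return score


def risk_tier(score: int) -> str:
    """Map a score to a tier using illustrative thresholds."""
    if score >= 10:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


# Example inventory with made-up entries.
inventory = [
    AISystem("approved-summarizer", True, "public", 1, 1),
    AISystem("browser-plugin-x", False, "confidential", 2, 3),
]

# Review the riskiest systems first.
for system in sorted(inventory, key=risk_score, reverse=True):
    score = risk_score(system)
    print(f"{system.name}: score={score}, tier={risk_tier(score)}")
```

An additive score is only one option; some teams prefer to let the single worst factor set the tier so that one severe exposure cannot be averaged away.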

Categorizing AI Risks: From Data Exfiltration to Model Poisoning

A core component of AI risk management is understanding the specific types of threats you face. The risks are not monolithic; they span a spectrum from data privacy breaches to sophisticated attacks against the AI models themselves. One of the most immediate and common threats is data leakage. Imagine a marketing analyst pasting a list of high-value leads, complete with contact information, into a public GenAI tool to draft personalized outreach emails. In that moment, sensitive customer data has left your organization’s control. Depending on the provider’s terms, it may be retained or used to train future models, potentially violating data protection regulations like GDPR or CCPA.

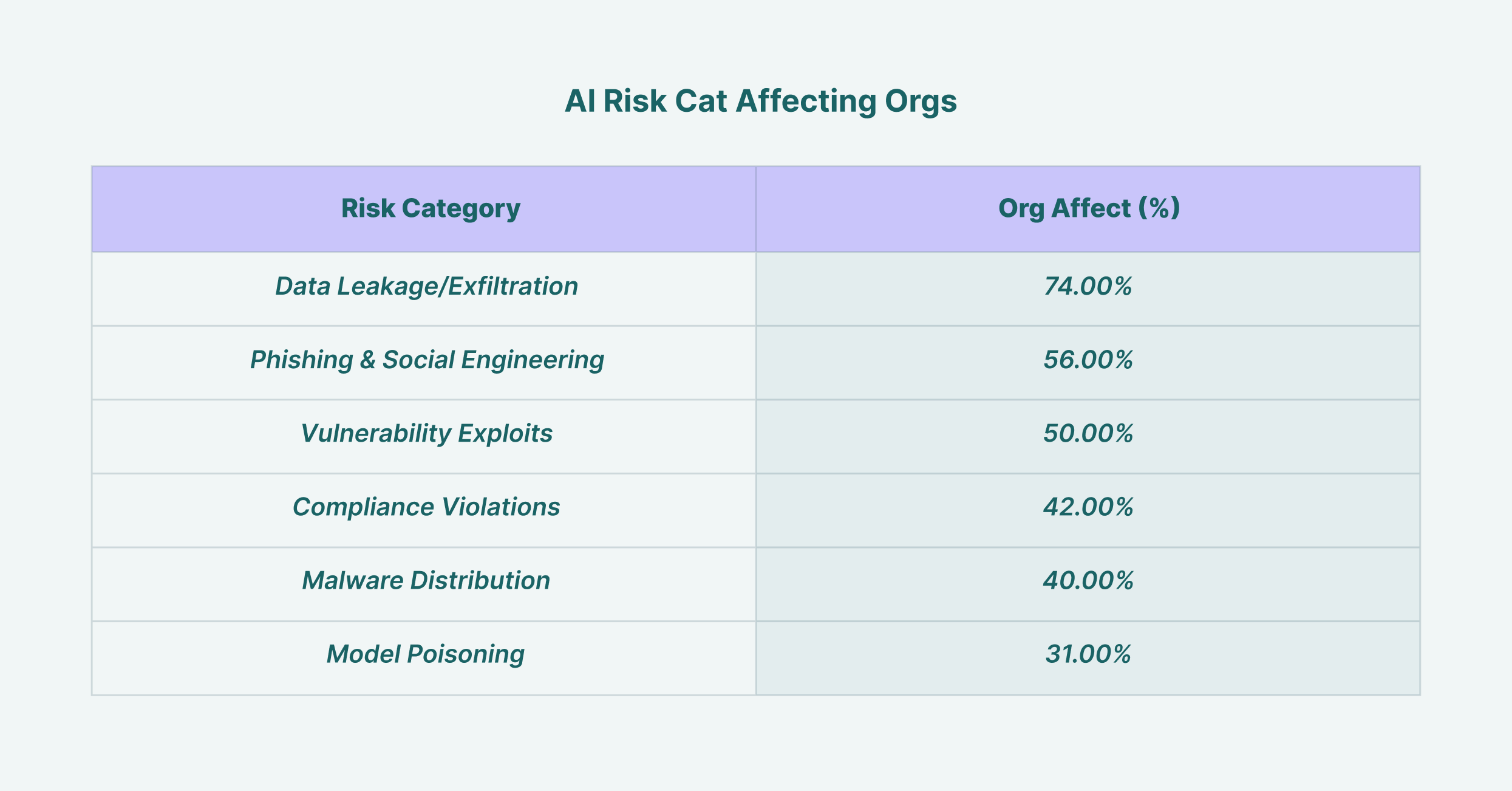

Data leakage affects 74% of organizations, making it the most prevalent AI security risk, followed by phishing attacks at 56%

Beyond data leakage, security leaders must contend with more advanced threats. Model poisoning occurs when an attacker intentionally feeds a model malicious data during its training phase, causing it to produce biased, incorrect, or harmful outputs. Evasion attacks involve crafting inputs that are specifically designed to bypass an AI system’s security filters. For CISOs, the effective use of AI in risk management also means leveraging AI-powered security tools to detect these very threats. Advanced threat detection systems can analyze user behavior and data flows to identify anomalous activities indicative of an AI-related security incident, turning the technology from a source of risk into a component of the solution.

The Critical Role of an AI Security Policy

To translate your framework into clear directives for your employees, a dedicated AI security policy is non-negotiable. This document serves as the authoritative source on the acceptable use of AI within the organization. It should be clear, concise, and easily accessible to all employees, leaving no room for ambiguity. A well-crafted AI security policy moves beyond simple “dos and don’ts” and provides context, explaining why certain restrictions are in place to foster a culture of security awareness rather than mere compliance.

The policy must explicitly define several key areas. First, it should list all sanctioned and approved AI tools, along with the process for requesting the evaluation of a new tool. This prevents the proliferation of shadow AI. Second, it must establish clear data handling guidelines, specifying what types of corporate information (e.g., public, internal, confidential, restricted) can be used with which category of AI tools. For example, using a public GenAI tool for summarizing publicly available news articles might be acceptable, but using it to analyze confidential financial projections would be strictly forbidden. The policy should also outline user responsibilities, consequences for non-compliance, and the incident response protocol for suspected AI-related breaches, ensuring everyone understands their role in safeguarding the organization.
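The data handling guidelines described above lend themselves to a simple lookup: each category of AI tool has a ceiling on the data classification it may receive. The sketch below assumes three hypothetical tool categories and ceilings; the four classification labels come from the policy text, everything else is illustrative.

```python
# Classification labels ordered from least to most sensitive.
CLASS_ORDER = ["public", "internal", "confidential", "restricted"]

# Hypothetical tool categories and the highest data class each may receive.
MAX_ALLOWED_CLASS = {
    "public_genai": "public",
    "enterprise_genai": "internal",   # assumes a vendor contract is in place
    "self_hosted": "confidential",
}


def is_use_allowed(tool_category: str, data_class: str) -> bool:
    """Allow a use only if the data class is at or below the tool's ceiling.

    Unknown tool categories and unknown data classes are denied by default.
    """
    ceiling = MAX_ALLOWED_CLASS.get(tool_category)
    if ceiling is None or data_class not in CLASS_ORDER:
        return False
    return CLASS_ORDER.index(data_class) <= CLASS_ORDER.index(ceiling)


# The two cases from the policy example: news summaries vs. financial projections.
print(is_use_allowed("public_genai", "public"))        # summarizing public news
print(is_use_allowed("public_genai", "confidential"))  # confidential projections
```

Denying unknown categories by default mirrors the policy's intent: a tool that has not been through the evaluation process is treated as unsanctioned.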

Evaluating Models and Plugins: A Focus on AI Third-Party Risk Management

The modern AI ecosystem is built on a complex supply chain of models, platforms, and plugins developed by third parties. This reality makes AI third-party risk management a critical pillar of your overall security strategy. Every time an employee enables a new plugin for their AI assistant or your development team integrates a third-party API, they are extending your organization’s attack surface. Each of these external components carries its own set of potential vulnerabilities, data privacy policies, and security postures, which are now inherited by your organization.

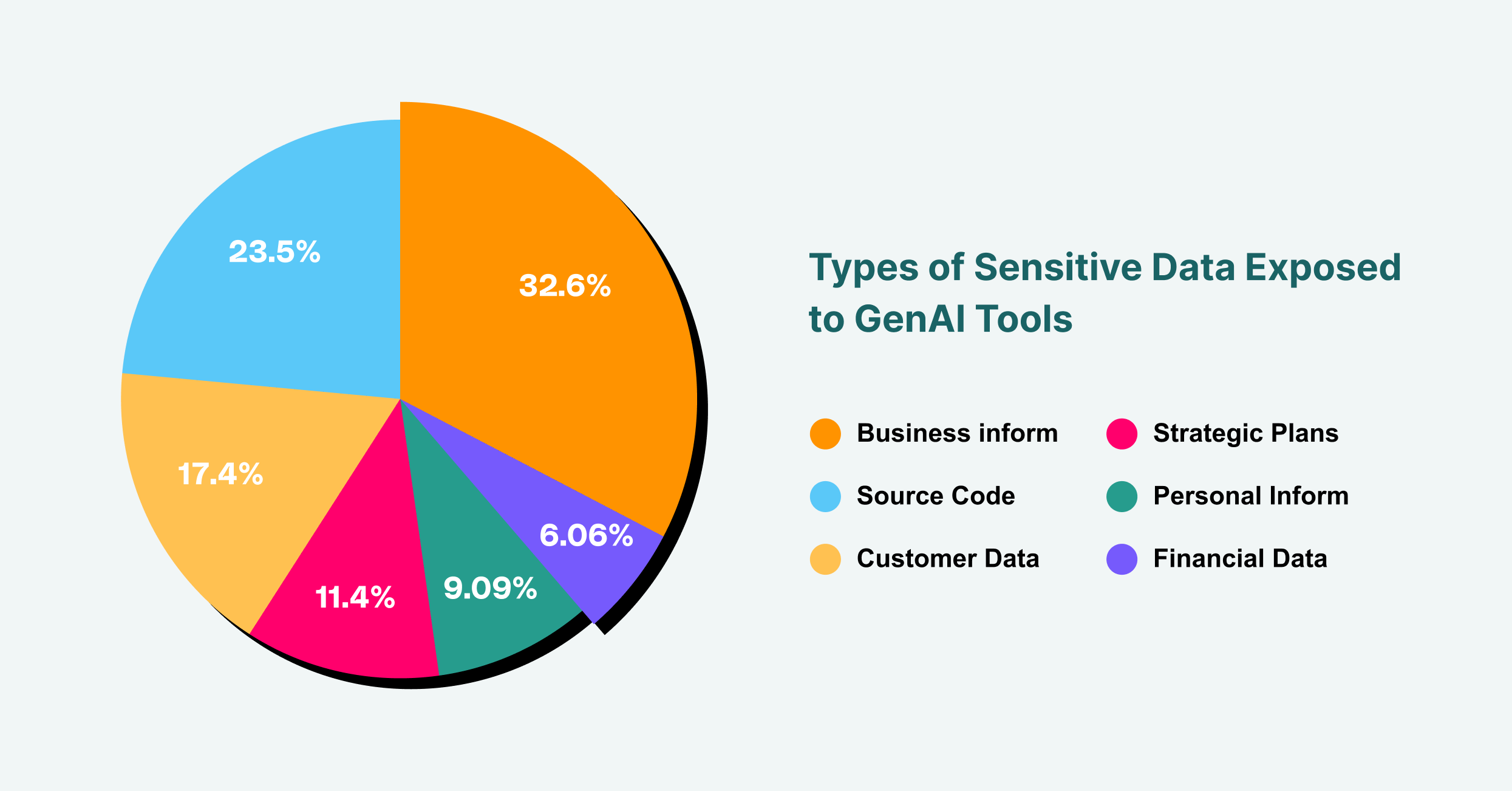

Business information accounts for 43% of sensitive data exposures to GenAI, followed by source code at 31% and customer data at 23%

A rigorous evaluation process is therefore essential. Before any third-party AI tool or component is approved for use, it must be subjected to a thorough security and privacy review. This involves scrutinizing the vendor’s security certifications, data handling practices, and incident response capabilities. What data does the tool collect? Where is it stored, and who has access to it? Does the vendor have a history of security breaches? For AI plugins, which are a growing vector for browser-based attacks, the vetting process should be even more stringent. Questions to ask include: What permissions does the plugin require? Who is the developer? Has its code been audited? By treating every third-party AI service with the same level of scrutiny as any other critical vendor, you can mitigate the risk of a supply chain attack compromising your organization.
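As one small piece of that vetting, the question "What permissions does the plugin require?" can be partly automated for browser extensions by reading the extension's manifest. The sketch below assumes a Chrome-style manifest with `permissions` and `host_permissions` keys; which permissions count as high risk is a judgment call, and the set used here is only illustrative.

```python
# Permission names follow Chrome's extension manifest format.
# Treating exactly these as "high risk" is an assumption for illustration.
HIGH_RISK = {"clipboardRead", "cookies", "history", "webRequest", "<all_urls>"}


def flag_permissions(manifest: dict) -> list[str]:
    """Return the requested permissions that appear in the high-risk set."""
    requested = set(manifest.get("permissions", []))
    requested |= set(manifest.get("host_permissions", []))
    return sorted(requested & HIGH_RISK)


# Made-up manifest for a hypothetical AI assistant plugin.
example_manifest = {
    "name": "example-ai-helper",
    "permissions": ["storage", "clipboardRead"],
    "host_permissions": ["<all_urls>"],
}

print(flag_permissions(example_manifest))
```

A flagged permission is a prompt for human review, not a verdict: many legitimate tools need broad access, and the remaining questions about the developer and code audits still require manual investigation.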

Implementing AI Risk Management Tools

Policy and process are foundational, but they are insufficient without technical enforcement. This is where AI risk management tools become essential. These solutions provide the visibility and control necessary to ensure that your AI security policy is being followed in practice, not just in theory. Given that the primary interface for most users interacting with GenAI is the web browser, tools that can operate at this layer are uniquely positioned to provide effective oversight.

Enterprise browser extensions or platforms, like LayerX, offer a powerful mechanism for AI risk management. They can discover and map all GenAI usage across the organization, providing a real-time inventory of which users are accessing which platforms. This visibility is the first step in shutting down shadow AI. From there, these tools can enforce granular, risk-based guardrails. For instance, you could configure a policy that prevents users from pasting text identified as “confidential” into a public AI chatbot, or that warns users before they upload a sensitive document. This layer of protection monitors and controls the flow of data between the user’s browser and the web, effectively acting as a Data Loss Prevention (DLP) solution specifically tailored for the age of AI. The right AI risk management tools bridge the gap between policy and reality, providing the technical means to enforce your governance decisions.
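To make the guardrail idea concrete, here is a minimal sketch of the decision logic behind a paste control. It is not how LayerX or any specific product works; the pattern names, the regular expressions, and the block/warn/allow outcomes are assumptions chosen for illustration, and real DLP engines use far more robust detection than keyword and regex matching.

```python
import re

# Illustrative detectors only; production DLP relies on richer classification.
PATTERNS = {
    "confidential_marking": re.compile(r"\b(confidential|internal only)\b", re.IGNORECASE),
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "card_like_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def evaluate_paste(text: str, destination_sanctioned: bool) -> tuple[str, list[str]]:
    """Return an action ("allow", "warn", or "block") and the detectors that fired.

    Assumed policy: sensitive content is blocked for unsanctioned destinations
    and triggers a warning for sanctioned ones.
    """
    hits = [name for name, pattern in PATTERNS.items() if pattern.search(text)]
    if not hits:
        return "allow", hits
    return ("warn" if destination_sanctioned else "block"), hits


print(evaluate_paste("Q3 forecast, CONFIDENTIAL", destination_sanctioned=False))
print(evaluate_paste("Contact jane.doe@example.com", destination_sanctioned=True))
print(evaluate_paste("Summarize this press release", destination_sanctioned=False))
```

Returning the list of detectors alongside the action lets the user-facing warning explain why the paste was stopped, which supports the policy goal of building awareness rather than mere compliance.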

Incident Handling and Response in the Age of AI

Even with the best preventative measures, incidents can still occur. How your organization responds is a defining factor in minimizing the impact of a breach. An effective incident response plan for AI must be both specific and well-rehearsed. When an alert is triggered, whether from a user report or an automated detection by one of your security tools, the response team needs a clear playbook to follow.

The first step is containment. If a user has inadvertently leaked sensitive data to an LLM, the immediate priority is to prevent further exposure. This could involve temporarily disabling the user’s access to the tool or even isolating their machine from the network. The next phase is investigation. What data was leaked? Who was responsible? How did our controls fail? This forensic analysis is crucial for understanding the root cause and preventing a recurrence. Finally, the plan must address eradication and recovery, which includes notifying affected parties as required by law, taking steps to have the data removed by the AI vendor if possible, and updating security policies and controls based on the lessons learned. A mature AI risk management posture means being just as prepared to respond to an incident as you are to prevent one.

Tracking and Improving Your AI Risk Posture

AI risk management is not a one-time project; it is a continuous cycle of assessment, mitigation, and improvement. The threat landscape is dynamic, with new AI tools and attack vectors emerging constantly. Therefore, tracking your organization’s AI risk posture over time is essential for ensuring your defenses remain effective. This requires a commitment to ongoing monitoring and the use of metrics to quantify your level of risk and the performance of your controls.

Key Performance Indicators (KPIs) can include the number of unsanctioned AI tools detected, the volume of data leakage incidents prevented, and the percentage of employees who have completed AI security training. Regular audits and penetration testing, specifically focused on AI systems, can also provide invaluable insights into weaknesses in your defenses. By continuously measuring and refining your approach, you create a resilient security program that adapts to the evolving challenges of the AI-driven world. This proactive stance ensures that your organization can continue to harness the power of AI confidently and securely, transforming a potential source of catastrophic risk into a well-managed strategic advantage.
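The three KPIs named above can be tracked with a small record per reporting period and a comparison between periods. The sketch below is one possible shape for that data; the field names are hypothetical and the figures are made-up sample values, not measurements.

```python
from dataclasses import dataclass


@dataclass
class PostureSnapshot:
    period: str
    unsanctioned_tools_detected: int
    leak_attempts_blocked: int
    employees_total: int
    employees_trained: int

    @property
    def training_completion_pct(self) -> float:
        """Percentage of employees who completed AI security training."""
        if self.employees_total == 0:
            return 0.0
        return round(100 * self.employees_trained / self.employees_total, 1)


def trend(previous: PostureSnapshot, current: PostureSnapshot) -> dict[str, float]:
    """Change in each KPI from the previous period to the current one."""
    return {
        "unsanctioned_tools_detected": current.unsanctioned_tools_detected
        - previous.unsanctioned_tools_detected,
        "leak_attempts_blocked": current.leak_attempts_blocked
        - previous.leak_attempts_blocked,
        "training_completion_pct": round(
            current.training_completion_pct - previous.training_completion_pct, 1
        ),
    }


# Made-up sample data for two quarters.
q1 = PostureSnapshot("Q1", 14, 32, 200, 120)
q2 = PostureSnapshot("Q2", 9, 41, 200, 170)

print(q2.training_completion_pct)
print(trend(q1, q2))
```

Raw deltas need interpretation: a rise in blocked leak attempts may reflect better detection coverage rather than riskier user behavior, so these numbers are best reviewed alongside audit findings.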