LayerX Research identified a coordinated set of Chrome browser extensions marketed as ChatGPT enhancement and productivity tools. In practice, however, these extensions are meant to steal users’ ChatGPT identities. The campaign consists of at least 16 distinct extensions developed by the same threat actor, in order to reach as wide a distribution as possible.

This campaign coincides with a broader trend: the rapid growth in adoption of AI-powered browser extensions aimed at helping users with their everyday productivity needs. While most AI extensions are completely benign, many mimic known brands to gain users’ trust, particularly those designed to enhance interaction with large language models. As these extensions increasingly require deep integration with authenticated web applications, they introduce a materially expanded browser attack surface.

Our analysis shows that extensions in this campaign implement a common mechanism that intercepts ChatGPT session authentication tokens and transmits them to a third-party backend. Possession of such tokens provides account-level access equivalent to that of the user, including access to conversation history and metadata. As a result, attackers can replicate a user’s access credentials to ChatGPT and impersonate that user, gaining access to all of the user’s ChatGPT conversations, data, or code.

This discovery underscores the need for corporations to monitor and restrict the use of third-party AI extensions, as they may be stealing sensitive information.

While these extensions do not exploit vulnerabilities in ChatGPT itself, their design enables session hijacking and covert account access, representing a significant security and privacy risk.

Currently, approximately 900 downloads are associated with this campaign, a drop in the bucket compared to GhostPoster or RolyPoly VPN. However, while the scope of an attack is an obvious indicator of its relevance, it’s not the only one. GPT optimizers are popular, and there are enough highly rated, legitimate ones on the Chrome Web Store that people could easily miss any warning signs. One of the variants even carries a “featured” badge stating that it is “following recommended practices for Chrome extensions.”

It takes just one iteration for a malicious extension to become popular. We believe that GPT optimizers will soon become as popular as, if not more popular than, VPN extensions, which is why we prioritized the publication of this analysis. Our goal is to shut this campaign down before it hits critical mass.

AI Browser Extensions as an Emerging Attack Surface

AI-focused browser extensions have become a common workflow component for users seeking productivity gains from generative AI platforms. These tools often require:

- Access to authenticated AI services

- Tight coupling with complex single-page applications

- Elevated execution contexts within the browser

As a result, AI extensions are uniquely positioned to observe sensitive runtime data, including authentication artifacts. This combination of high privilege, user trust, and rapid adoption makes them an increasingly attractive vector for abuse.

The extensions analyzed in this research demonstrate how legitimate-looking AI tooling can be leveraged to obtain persistent access to user accounts without exploiting software vulnerabilities or triggering conventional security controls.

Technical Analysis

Session Token Interception and Exfiltration

The primary security issue identified across the campaign is ChatGPT session token interception.

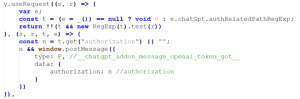

Across all analyzed variants (with one exception), the extensions implement the following workflow:

- A content script is injected into chatgpt.com and executed in the page’s MAIN JavaScript world.

- The script hooks the browser’s window.fetch function, allowing it to observe outbound requests initiated by the ChatGPT web application.

Figure 1. Fetch API Hooking

- When a request containing an authorization header is detected, the session token is extracted.

Figure 2. Authorization Token Extraction

- The MAIN-world script passes the token in a message to a second content script, which transmits it to a remote server.

This approach allows the extension operator to authenticate to ChatGPT services using the victim’s active session and to access the user’s entire chat history and connectors (such as the user’s Google Drive, Slack, GitHub, and other sensitive data sources).
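To make the interception steps concrete, here is a minimal TypeScript sketch of how wrapping a fetch-style function exposes the authorization header to whoever installed the wrapper. It is not the campaign's code. The names `wrapFetch`, `FetchLike`, and `onToken` are hypothetical, the types are simplified stand-ins for the browser's Fetch API, the `Bearer` scheme is an assumption, and the callback merely stands in for the hand-off to the second content script.

```typescript
// Simplified stand-ins for the browser Fetch API types (assumptions for illustration).
type HeaderBag = Record<string, string>;
interface FetchInit {
  headers?: HeaderBag;
}
type FetchLike = (url: string, init?: FetchInit) => Promise<unknown>;

// Wraps a fetch-style function so that every call is inspected before being
// forwarded unchanged. `onToken` is a hypothetical callback standing in for
// whatever the wrapper's author does with the observed value.
function wrapFetch(original: FetchLike, onToken: (token: string) => void): FetchLike {
  return (url, init) => {
    const headers = init?.headers ?? {};
    for (const name of Object.keys(headers)) {
      const value = headers[name];
      // HTTP header names are case-insensitive; "Bearer" is an assumed scheme.
      if (name.toLowerCase() === "authorization" && value.startsWith("Bearer ")) {
        onToken(value.slice("Bearer ".length));
      }
    }
    // The request proceeds as normal, so the calling code sees no difference.
    return original(url, init);
  };
}

// Demonstration against a fake fetch, so nothing touches the network.
const observedTokens: string[] = [];
const fakeFetch: FetchLike = async (url) => `response for ${url}`;
const hookedFetch = wrapFetch(fakeFetch, (token) => {
  observedTokens.push(token);
});
void hookedFetch("https://example.invalid/api", {
  headers: { Authorization: "Bearer demo-token" },
});
console.log(observedTokens); // the callback runs before the request is forwarded
```

The wrapper only matters when it replaces the same `fetch` the page itself calls. A script in Chrome's isolated content-script world has its own JavaScript globals, so replacing `fetch` there would not affect the application's requests.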

MAIN World Script Execution

The extension’s interception content script is configured to execute in the MAIN world:

Figure 3. MAIN World Setting for Content Script

Executing content scripts in the MAIN JavaScript world enables direct interaction with the page’s native runtime, rather than operating within Chrome’s isolated content-script environment.

Specifically, this means the extension code:

- Runs in the same execution context as the web application itself

- Has access to the same JavaScript objects, functions, and in-memory state used by the page

- Can override or wrap native APIs (e.g., window.fetch, XMLHttpRequest, Promise, application-defined functions)

- Can observe or manipulate runtime data that never traverses the network or DOM, including:

  - authentication headers before transmission

  - in-memory tokens and session artifacts

  - application state objects used by the frontend framework
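For defenders reviewing extensions, a MAIN-world declaration is something that can be checked statically. The following is a minimal sketch, assuming the extension declares its content scripts in a Manifest V3 `manifest.json` using the `world` key. The function names and the sample manifest are hypothetical, and the pattern matching is a simplified approximation of Chrome's match-pattern rules rather than a full implementation.

```typescript
// Minimal subset of the Manifest V3 schema needed for this check.
interface ContentScript {
  matches?: string[];
  js?: string[];
  world?: "ISOLATED" | "MAIN";
}
interface Manifest {
  name?: string;
  content_scripts?: ContentScript[];
}

// Simplified check for whether a match pattern can apply to a given host.
function patternCoversHost(pattern: string, host: string): boolean {
  if (pattern === "<all_urls>") return true;
  const match = /^[^:]+:\/\/([^/]+)\//.exec(pattern);
  if (!match) return false;
  const patternHost = match[1];
  if (patternHost === "*") return true;
  if (patternHost.startsWith("*.")) {
    const base = patternHost.slice(2);
    return host === base || host.endsWith("." + base);
  }
  return patternHost === host;
}

// Returns the content scripts that run in the MAIN world on the host of interest.
function findMainWorldScripts(manifest: Manifest, host: string): ContentScript[] {
  return (manifest.content_scripts ?? []).filter(
    (script) =>
      script.world === "MAIN" &&
      (script.matches ?? []).some((pattern) => patternCoversHost(pattern, host)),
  );
}

// Hypothetical manifest, for illustration only.
const sampleManifest: Manifest = {
  name: "Example helper",
  content_scripts: [
    { matches: ["https://chatgpt.com/*"], js: ["inject.js"], world: "MAIN" },
    { matches: ["https://chatgpt.com/*"], js: ["bridge.js"] },
  ],
};
console.log(findMainWorldScripts(sampleManifest, "chatgpt.com").map((s) => s.js));
```

A static manifest check is only a first pass. Scripts can also be injected into the MAIN world at runtime through the `chrome.scripting` API, which this sketch does not cover.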

Data Exposure Beyond the Token

In addition to the ChatGPT session token, the following data is sent to the third-party server:

- Extension metadata (version, locale, client identifiers)

- Usage telemetry and event data

- Backend-issued access tokens used by the extension service

This data allows the attacker to further extend token-based access and enables persistent user identification, behavioral profiling, and long-lived access to third-party services. When combined, these data elements can be used to correlate activity across sessions, infer usage patterns, and maintain ongoing access beyond a single browser interaction, increasing both the privacy impact and the potential blast radius of any misuse or compromise of the supporting infrastructure.

Campaign Scope and Distribution

Of the 16 identified extensions in this campaign, 15 were distributed through the Chrome Web Store, while one extension was published via the Microsoft Edge Add-ons marketplace. At the time of writing, all identified extensions remain available in their respective stores.

Most extensions in the campaign show relatively low individual installation counts, with only a small subset reaching higher adoption. At LayerX, we hope that this publication helps stop the campaign at an early stage, with minimal impact.

Infrastructure and Campaign Indicators

Several indicators suggest these extensions are part of a single coordinated campaign, rather than independent development efforts:

- A shared, minified codebase reused across multiple extension IDs

- Consistent publisher characteristics, despite the use of multiple listings

- Highly similar icons, branding, and descriptions

Figure 4. Visual Similarities

- Batch uploads, with multiple extensions published on the same dates

- Synchronized update timelines, with several extensions updated concurrently

- Shared backend infrastructure, with all extensions communicating with the same domain

- Overlapping legitimate functionality, reinforcing perceived trustworthiness

Early Detection via Extension Intelligence

LayerX Research was able to identify and attribute this campaign at an early stage through a combination of AI-driven browser extension detection and code similarity analysis.

Specifically, our detection capabilities enabled:

- Identification of shared, minified code artifacts across multiple extension IDs

- Correlation of extensions with near-identical runtime behavior, despite differing names and feature descriptions

- Recognition of variant proliferation patterns, where multiple extensions with overlapping functionality are published and updated in coordinated batches

These signals allowed us to cluster the extensions into a single campaign before widespread adoption, highlighting the importance of proactive visibility into browser extension ecosystems as AI tooling continues to expand.
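Code similarity between minified bundles can be approximated in many ways. The sketch below uses Jaccard similarity over character shingles, a generic technique chosen here purely for illustration. It is not claimed to be the method LayerX uses, and the shingle length `k = 5`, the function names, and the sample snippets are all assumptions.

```typescript
// Collects the set of overlapping k-character substrings ("shingles") of a
// source string after stripping whitespace, so formatting changes are ignored.
function shingles(source: string, k: number = 5): Set<string> {
  const normalized = source.replace(/\s+/g, "");
  const result = new Set<string>();
  for (let i = 0; i + k <= normalized.length; i++) {
    result.add(normalized.slice(i, i + k));
  }
  return result;
}

// Jaccard similarity: |A ∩ B| / |A ∪ B|, from 0 (disjoint) to 1 (identical sets).
function jaccard(a: Set<string>, b: Set<string>): number {
  if (a.size === 0 && b.size === 0) return 1;
  let shared = 0;
  a.forEach((item) => {
    if (b.has(item)) shared++;
  });
  return shared / (a.size + b.size - shared);
}

// Hypothetical minified snippets that differ only in one identifier.
const bundleA = "const o=window.fetch;window.fetch=function(a,b){return o(a,b)}";
const bundleB = "const q=window.fetch;window.fetch=function(a,b){return q(a,b)}";
const score = jaccard(shingles(bundleA), shingles(bundleB));
console.log(score.toFixed(2));
```

Pairs of extensions scoring above a chosen threshold could then be grouped as candidates for the same codebase. Picking that threshold is a tuning decision this sketch does not address.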

Conclusion

This research highlights how browser extensions targeting AI platforms can be leveraged to achieve account-level access through legitimate session mechanisms, without exploiting vulnerabilities or deploying overt malware.

By combining MAIN-world execution with authentication token interception, the operators obtained persistent access to user accounts while remaining within the boundaries of standard web behavior. Such techniques are particularly difficult to detect using traditional endpoint or network security tools.

As AI platforms continue to be integrated into enterprise and personal workflows, browser extensions interacting with authenticated AI services should be treated as high-risk software and subjected to rigorous scrutiny.

Indicators of Compromise (IOCs)

Extensions

| ID | Extension Name | Installs |
| --- | --- | --- |
| lmiigijnefpkjcenfbinhdpafehaddag | ChatGPT folder, voice download, prompt manager, free tools – ChatGPT Mods | 605 |
| obdobankihdfckkbfnoglefmdgmblcld | ChatGPT voice download, TTS download – ChatGPT Mods | 156 |
| kefnabicobeigajdngijnnjmljehknjl | ChatGPT pin chat, bookmark – ChatGPT Mods | 18 |
| ifjimhnbnbniiiaihphlclkpfikcdkab | ChatGPT message navigator, history scroller – ChatGPT Mods | 11 |
| pfgbcfaiglkcoclichlojeaklcfboieh | ChatGPT model switch, save advanced model uses – ChatGPT Mods | 11 |
| hljdedgemmmkdalbnmnpoimdedckdkhm | ChatGPT export, Markdown, JSON, images – ChatGPT Mods | 10 |
| afjenpabhpfodjpncbiiahbknnghabdc | ChatGPT Timestamp Display – ChatGPT Mods | 13 |
| gbcgjnbccjojicobfimcnfjddhpphaod | ChatGPT bulk delete, Chat manager – ChatGPT Mods | 11 |
| ipjgfhcjeckaibnohigmbcaonfcjepmb | ChatGPT search history, locate specific messages – ChatGPT Mods | 11 |
| mmjmcfaejolfbenlplfoihnobnggljij | ChatGPT prompt optimization – ChatGPT Mods | 10 |
| lechagcebaneoafonkbfkljmbmaaoaec | Collapsed message – ChatGPT Mods | 13 |
| nhnfaiiobkpbenbbiblmgncgokeknnno | Multi-Profile Management & Switching – ChatGPT Mods | 0 |
| hpcejjllhbalkcmdikecfngkepppoknd | Search with ChatGPT – ChatGPT Mods | 0 |
| hfdpdgblphooommgcjdnnmhpglleaafj | ChatGPT Token counter – ChatGPT Mods | 5 |
| ioaeacncbhpmlkediaagefiegegknglc | ChatGPT Prompt Manager, Folder, Library, Auto Send – ChatGPT Mods | 5 |
| jhohjhmbiakpgedidneeloaoloadlbdj | ChatGPT Mods – Folder Voice Download & More Free Tools | 17 |

Domains

chatgptmods.com

imagents.top

Emails

support@imagents.top
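The extension IDs listed under Extensions can be matched against an inventory of installed extensions. The following is a minimal sketch. How the inventory is collected (for example from an endpoint agent or a browser management console) is outside its scope, and the function name `findFlaggedExtensions` and the sample inventory are hypothetical.

```typescript
// Extension IDs copied from the Extensions table in this section.
const IOC_EXTENSION_IDS: ReadonlySet<string> = new Set([
  "lmiigijnefpkjcenfbinhdpafehaddag",
  "obdobankihdfckkbfnoglefmdgmblcld",
  "kefnabicobeigajdngijnnjmljehknjl",
  "ifjimhnbnbniiiaihphlclkpfikcdkab",
  "pfgbcfaiglkcoclichlojeaklcfboieh",
  "hljdedgemmmkdalbnmnpoimdedckdkhm",
  "afjenpabhpfodjpncbiiahbknnghabdc",
  "gbcgjnbccjojicobfimcnfjddhpphaod",
  "ipjgfhcjeckaibnohigmbcaonfcjepmb",
  "mmjmcfaejolfbenlplfoihnobnggljij",
  "lechagcebaneoafonkbfkljmbmaaoaec",
  "nhnfaiiobkpbenbbiblmgncgokeknnno",
  "hpcejjllhbalkcmdikecfngkepppoknd",
  "hfdpdgblphooommgcjdnnmhpglleaafj",
  "ioaeacncbhpmlkediaagefiegegknglc",
  "jhohjhmbiakpgedidneeloaoloadlbdj",
]);

// Returns the installed IDs that appear in the IOC list. IDs are trimmed and
// lowercased first so that formatting differences in the inventory do not matter.
function findFlaggedExtensions(installedIds: string[]): string[] {
  return installedIds
    .map((id) => id.trim().toLowerCase())
    .filter((id) => IOC_EXTENSION_IDS.has(id));
}

// Hypothetical inventory: one listed ID and one placeholder ID.
const inventory = [
  " LMIIGIJNEFPKJCENFBINHDPAFEHADDAG ",
  "aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa",
];
console.log(findFlaggedExtensions(inventory));
```

Matching on IDs only catches the listings already identified. New variants published under fresh IDs would need the behavioral and similarity signals described earlier.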

Tactics, Techniques, and Procedures (TTPs)

| Tactic | Technique |
| --- | --- |
| Defense Evasion | LX7.011 (T1036) – Masquerading |
| Defense Evasion | LX7.003 (T1140) – Code Obfuscation/Deobfuscation |
| Credential Access | LX8.004 (T1528) – Steal Application Access Token |
| Execution | LX4.006 – Method Hijacking |

Recommendations

Security professionals, enterprise defenders, and browser developers should take the following actions:

- Classify AI-Integrated Extensions as Privileged Applications – Extensions that integrate with authenticated AI platforms should be treated as high-risk, privileged software, as their access to runtime state and authentication artifacts exceeds that of typical browser add-ons.

- Deploy behavior-based extension monitoring technologies to detect unauthorized network activity or suspicious DOM manipulation.
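In managed Chrome environments, one way to act on the first recommendation is to restrict specific extensions through the `ExtensionSettings` enterprise policy. The sketch below builds such a policy object as JSON. It assumes the organization manages Chrome through enterprise policy, it only covers the `installation_mode` setting, and the helper name `buildExtensionSettings` is hypothetical. The two IDs in the example are taken from the Indicators of Compromise section.

```typescript
// Subset of the ExtensionSettings policy shape used in this sketch.
type InstallationMode = "allowed" | "blocked";
interface ExtensionRule {
  installation_mode: InstallationMode;
}
type ExtensionSettings = Record<string, ExtensionRule>;

// Builds a policy with a default rule under the "*" key and an explicit
// "blocked" rule for each listed extension ID.
function buildExtensionSettings(
  blockedIds: string[],
  defaultMode: InstallationMode = "allowed",
): ExtensionSettings {
  const policy: ExtensionSettings = { "*": { installation_mode: defaultMode } };
  for (const id of blockedIds) {
    policy[id] = { installation_mode: "blocked" };
  }
  return policy;
}

const examplePolicy = buildExtensionSettings([
  "lmiigijnefpkjcenfbinhdpafehaddag",
  "obdobankihdfckkbfnoglefmdgmblcld",
]);
console.log(JSON.stringify(examplePolicy, null, 2));
```

Passing `"blocked"` as the default mode would turn this into an allowlist model, in which only explicitly approved extensions can be installed. That fits the idea of treating AI-integrated extensions as privileged software, at the cost of more administrative overhead.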