With 1.16 billion users, ChatGPT has become an inseparable part of many people’s lives. A user base of this size requires security and IT teams to find and implement a solution in their stack that can protect the organization from the security vulnerabilities ChatGPT may introduce.

This is not a hypothetical risk; numerous reports of potential cybersecurity breaches involving ChatGPT have already been published. In this blog post, we explore these risks and find out which security solution can help protect against data leakage.

To read more about how the LayerX browser security solution can protect your organization from potential data leakage that could result from employee use of ChatGPT or other Generative AI tools, learn more here.

ChatGPT’s Data Protection Blind Spots

The infamous Samsung ChatGPT leak (which is quickly becoming the ‘SolarWinds’ of generative AI cybersecurity breaches) has become a representative example of the cybersecurity vulnerabilities that plague generative AI platforms. In the Samsung debacle, engineers pasted proprietary code into ChatGPT. Since everything pasted into ChatGPT could be used for training and for the answers given to other users, these engineers potentially shared confidential code with any competitors who know how to ask ChatGPT the right questions.

ChatGPT might seem like an objective, productivity-driving tool. However, it’s actually a potential data-leakage channel. Incidentally, when asked whether it has the potential for data leakage, ChatGPT answers: “As an AI language model, I do not have any inherent motivation or ability to leak data. However, any system that stores data has a potential risk of data leakage or other security vulnerabilities…”

Security researchers have already demonstrated how ChatGPT and its APIs can be exploited to write malware, how ChatGPT bugs created personal data leaks, and even how prompts can be used to extract sensitive information shared by other users. Given that Generative AI platforms are poised to gain greater popularity in the upcoming years, it’s up to security stakeholders and teams to secure their organization from the possibility of their crown jewel information being leaked onto the public internet, due to human error, fallacy, or just plain naivety.

Where DLP and CASB Fall Short

Traditionally, enterprises relied on DLP (Data Leak Prevention) solutions to protect sensitive organizational data from unauthorized access or distribution. DLPs monitor the flow of sensitive data in the organization. Once they identify a policy being breached, for example an unauthorized user attempting to access a restricted resource, they respond accordingly, for instance by blocking the data or alerting administrators. CASB (Cloud Access Security Broker) solutions might also be used to shield sensitive data from being transmitted outside the organization.

However, both DLP and CASB solutions are file-oriented. This means that while they prevent actions like modifying, downloading, or sharing data, this prevention is applied to files. When employees type or paste data into the browser on the ChatGPT website, DLPs and CASBs are of no use. These browser-based actions are beyond their governance and control.

What is Browser Security?

Browser security comprises the technologies, tools, platforms, and practices that enable access to SaaS applications and websites while safeguarding organizational systems and data. By utilizing a browser security solution, companies can detect and prevent web-based threats and risks that target the browser or use it as an attack vector. Such threats include malware, social engineering, data theft, and data exfiltration.

Unlike networking and endpoint security solutions, browser security platforms focus on live web session activity, which encompasses the actual non-encrypted web pages that browsers render and display. Browser security solutions operate by identifying and blocking web-borne threats through activity policies, preventing data sharing, enforcing least privilege, providing visibility, detecting malicious activities, and securing both managed and BYOD/third-party devices. As such, they can be used for securing activities in ChatGPT.

How the LayerX Browser Security Solution Prevents Data Leaks to ChatGPT

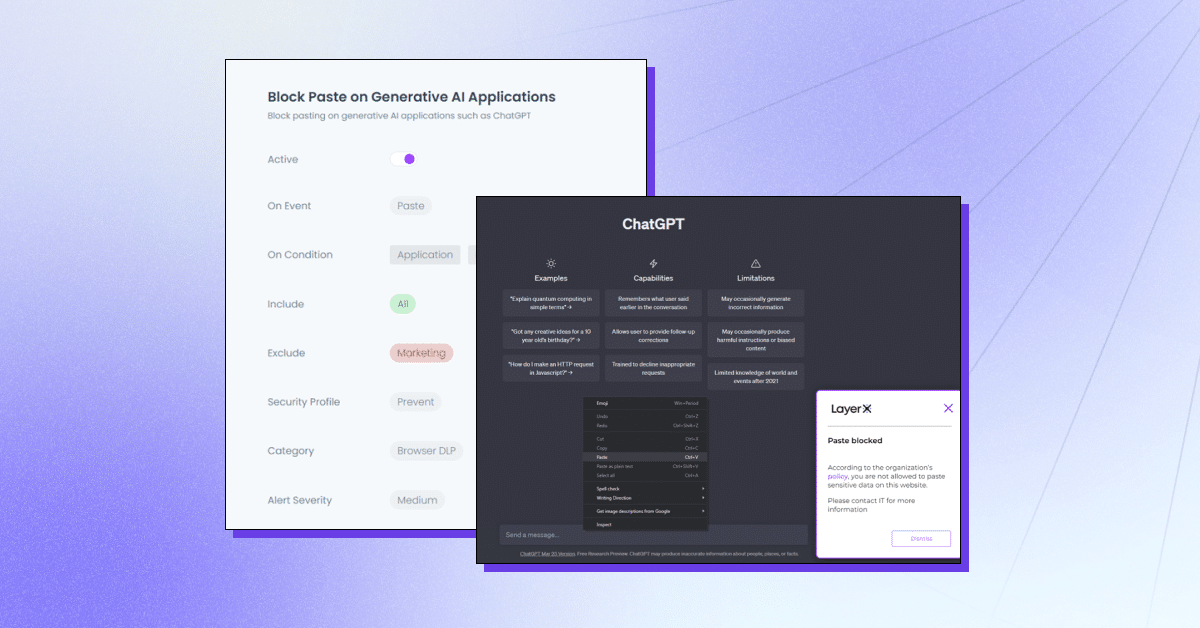

LayerX is a user-first browser security platform that works with any commercial browser, turning it into the most protected and manageable workspace. LayerX continuously monitors and analyzes browser sessions to enforce their protection in real time. Through granular visibility into every event that takes place in the session, LayerX can single out text insertion events. All security teams need to do is define a policy that limits or blocks this ability within the ChatGPT tab. This policy can be all-encompassing, i.e., preventing use of ChatGPT altogether, or it can target specific strings.
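To make the idea of a text insertion policy more concrete, here is a minimal Python sketch of how such a rule might be evaluated. It is an illustration only, not LayerX's actual API or configuration format: the `TextInsertionPolicy` class, the `is_insertion_allowed` function, the `chat.openai.com` hostname, and the example patterns are all assumptions made for this example.

```python
import re
from dataclasses import dataclass, field


# Hypothetical policy model; the names are illustrative, not a real product API.
@dataclass
class TextInsertionPolicy:
    hosts: set[str]                # sites the policy applies to
    block_all: bool = False        # True = block every insertion on those sites
    blocked_patterns: list[str] = field(default_factory=list)  # regexes for specific strings


def is_insertion_allowed(policy: TextInsertionPolicy, host: str, text: str) -> bool:
    """Return True if a paste or typing event may proceed."""
    if host not in policy.hosts:
        return True   # the policy does not cover this site
    if policy.block_all:
        return False  # all-encompassing mode: nothing may be inserted
    # String-specific mode: block only when a listed pattern appears in the text.
    return not any(re.search(p, text, re.IGNORECASE) for p in policy.blocked_patterns)


# Assumed example: block insertions containing specific strings on the ChatGPT tab.
policy = TextInsertionPolicy(
    hosts={"chat.openai.com"},
    blocked_patterns=[r"confidential", r"BEGIN (RSA )?PRIVATE KEY"],
)
```

With this sketch, an ordinary question passes through, while text containing one of the listed strings is stopped. Setting `block_all=True` corresponds to the all-encompassing variant of the policy.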

LayerX offers multiple capabilities to protect from ChatGPT data leakage:

- Preventing data pasting or submission – Ensuring employees don’t add sensitive information into the Generative AI platform.

- Detecting sensitive data typing – Identifying when employees are about to submit sensitive data to ChatGPT and restricting it.

- Warning mode – Participating in employee training while they are using ChatGPT by adding “safe use” guidelines.

- Site blocking or disabling – Preventing the use of ChatGPT altogether or ChatGPT-like browser extensions.

- Requiring user consent/justification – Ensuring the use of a generative AI tool is approved.
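The capabilities above can be thought of as different outcomes of a single decision made for each browser event. The following Python sketch shows one possible way to combine them. It is a hypothetical model rather than a description of how LayerX works: the `Action` enum, the `decide` function, the site lists, the sensitive-data patterns, and the order in which the checks run are all assumptions.

```python
import re
from enum import Enum
from typing import Optional


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"                                    # allow, but show "safe use" guidelines
    REQUIRE_JUSTIFICATION = "require_justification"  # ask the user for a reason first
    BLOCK = "block"


# Assumed example values, chosen for illustration only.
BLOCKED_SITES = {"blocked-ai.example"}   # sites disabled altogether
GENAI_SITES = {"chat.openai.com"}        # sites monitored for text insertion
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),               # US SSN-like number
    re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),  # PEM private key header
]


def decide(host: str, text: str, justification: Optional[str] = None) -> Action:
    """Pick an action for text about to be submitted on a given site."""
    if host in BLOCKED_SITES:
        return Action.BLOCK                  # site blocking or disabling
    if host not in GENAI_SITES:
        return Action.ALLOW                  # not a monitored generative AI site
    if any(p.search(text) for p in SENSITIVE_PATTERNS):
        return Action.BLOCK                  # sensitive data detected
    if not justification:
        return Action.REQUIRE_JUSTIFICATION  # consent/justification not yet given
    return Action.WARN                       # approved use, with a safe-use reminder
```

For example, `decide("chat.openai.com", "summarize this")` asks for a justification first, while the same call with a justification supplied returns a warning-mode result. A real deployment would likely make the order and strictness of these checks configurable per policy.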

LayerX will protect your organization from potential data leakage that could result from employee use of ChatGPT or other Generative AI tools. Learn more here.