In an era where Artificial Intelligence (AI), and specifically Generative AI (GenAI), is fundamentally transforming the enterprise ecosystem, establishing strong governance frameworks is more crucial than ever. The publication of ISO/IEC 42001 in December 2023, the first international standard for AI management systems, marks a pivotal step in aligning AI deployment with globally recognized best practices. This standard offers a structured path for organizations to manage AI systems responsibly, mitigate risks, ensure compliance, and foster ethical innovation. For security analysts, CISOs, and IT leaders, understanding this new standard is not just about compliance; it’s about future-proofing your AI strategy.

Implementing the ISO 42001 AI standard aligns your enterprise with international benchmarks and enhances trust among stakeholders, customers, and regulators. As AI continues to evolve, the standard will play an increasingly significant role in establishing a resilient and compliant AI ecosystem. This article explores the core requirements of ISO 42001, provides practical steps for implementation, and shows how organizations can use this framework for effective AI governance and competitive advantage.

Understanding the ISO 42001 AI Standard

So, what exactly is ISO/IEC 42001? It is a management system standard designed to help organizations establish, implement, maintain, and continually improve an AI Management System (AIMS). Think of it as the AI equivalent of the well-known ISO 27001 for information security management. It doesn’t prescribe specific technical solutions but instead provides a comprehensive framework for governing AI initiatives throughout their lifecycle.

The primary goal of ISO 42001 is to ensure that AI systems are developed and used in a responsible, ethical, and transparent manner. It provides a structure for identifying and managing risks associated with AI, from data privacy and bias to security vulnerabilities. This is especially critical with the rise of GenAI and the associated risks of data leakage and “shadow SaaS,” where employees use unsanctioned AI tools that fall outside the purview of IT security.

Why prioritize this now? GenAI tools have delivered a significant productivity boost, but they also expose organizations to severe risks, such as the exfiltration of sensitive personally identifiable information (PII) or corporate intellectual property to third-party Large Language Models (LLMs). The ISO 42001 AI standard provides the guardrails needed to manage these new threat vectors effectively.

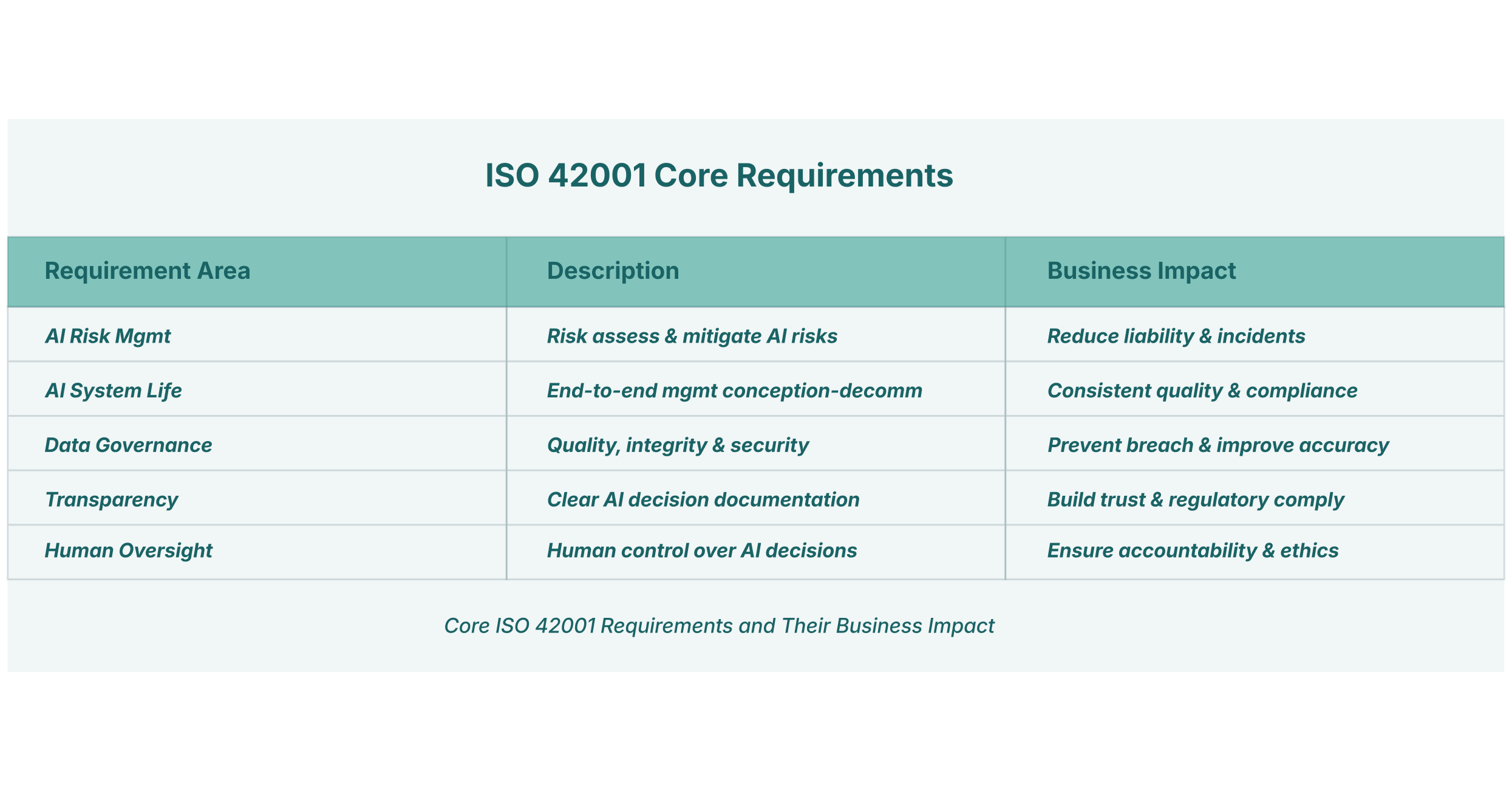

The Core Requirements of ISO 42001

To achieve ISO 42001 compliance, an organization must address several key areas. The standard uses the same harmonized (high-level) structure as other ISO management system standards, which simplifies integration with existing frameworks like ISO/IEC 27001 (Information Security) and ISO 9001 (Quality Management). Its requirements are set out in clauses 4 through 10 (context of the organization, leadership, planning, support, operation, performance evaluation, and improvement) and are supplemented by Annex A, a set of reference controls that organizations select and justify in a statement of applicability.

AI Risk Management

A central component is the requirement for a structured AI risk assessment process, together with AI risk treatment and an AI system impact assessment. Organizations must identify, analyze, and evaluate risks related to their AI systems, including potential impacts on individuals, society, and the organization itself. For example, a risk assessment would need to cover potential algorithmic bias, data poisoning attacks, and the consequences of AI system failures. Because AI models and their operational contexts evolve, this cannot be a one-off exercise: risk assessments must be repeated at planned intervals and whenever significant changes are proposed or occur.
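To make the risk assessment concrete, here is a minimal risk-register sketch that scores each risk as likelihood × impact and flags the ones needing treatment. The 1–5 scales, the `AIRisk` class, and the threshold of 12 are hypothetical assumptions for illustration; ISO/IEC 42001 does not prescribe a scoring method, and organizations define their own risk criteria.

```python
from dataclasses import dataclass

# Hypothetical 1-5 scales and threshold: ISO/IEC 42001 does not prescribe a scoring method.
TREATMENT_THRESHOLD = 12  # assumed risk-acceptance criterion

@dataclass
class AIRisk:
    system: str
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe) for individuals, society, or the organization

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

def risks_requiring_treatment(register: list[AIRisk]) -> list[AIRisk]:
    """Return risks at or above the threshold, highest score first."""
    flagged = [r for r in register if r.score >= TREATMENT_THRESHOLD]
    return sorted(flagged, key=lambda r: r.score, reverse=True)

register = [
    AIRisk("loan-scoring-model", "Algorithmic bias against a protected group", 3, 5),
    AIRisk("support-chatbot", "Training data poisoned via user feedback", 2, 4),
    AIRisk("support-chatbot", "Outage leaves customers without answers", 4, 3),
]

for risk in risks_requiring_treatment(register):
    print(risk.score, risk.system, risk.description)
```

Run as-is, this prints the bias risk (score 15) and the outage risk (score 12); the data-poisoning risk (score 8) falls below the assumed threshold and would be accepted or monitored under whatever criteria the organization actually defines. Re-running the scoring at planned intervals, or after a significant change, is what keeps the register current.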

AI System Lifecycle

The standard requires organizations to define and manage the entire lifecycle of their AI systems. This spans from initial conception and data acquisition through design, development, verification, validation, deployment, monitoring, and eventual decommissioning. Each stage must have defined processes and controls to ensure the AI system operates as intended and meets all ethical and legal requirements.
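One way to enforce "defined processes and controls" at each stage is a stage gate: a system cannot move to the next lifecycle stage until the evidence required to exit the current one exists. The sketch below uses the stages named above; the exit criteria (`intended_use`, `test_report`, and so on) are hypothetical examples, not artifacts mandated by the standard.

```python
from enum import IntEnum

class Stage(IntEnum):
    CONCEPTION = 0
    DATA_ACQUISITION = 1
    DESIGN = 2
    DEVELOPMENT = 3
    VERIFICATION = 4
    VALIDATION = 5
    DEPLOYMENT = 6
    MONITORING = 7
    DECOMMISSIONING = 8

# Hypothetical exit criteria: evidence that must exist before leaving each stage.
EXIT_CRITERIA = {
    Stage.CONCEPTION: {"intended_use", "impact_assessment"},
    Stage.DATA_ACQUISITION: {"data_provenance_record"},
    Stage.DESIGN: {"design_review"},
    Stage.DEVELOPMENT: {"code_review"},
    Stage.VERIFICATION: {"test_report"},
    Stage.VALIDATION: {"validation_signoff"},
    Stage.DEPLOYMENT: {"deployment_approval"},
    Stage.MONITORING: {"retirement_decision"},
}

def advance(current: Stage, evidence: set[str]) -> Stage:
    """Move to the next lifecycle stage only if the current stage's exit criteria are met."""
    if current is Stage.DECOMMISSIONING:
        raise ValueError("System is already decommissioned")
    missing = EXIT_CRITERIA[current] - evidence
    if missing:
        raise ValueError(f"Cannot leave {current.name}: missing {sorted(missing)}")
    return Stage(current + 1)
```

For example, `advance(Stage.VERIFICATION, {"code_review"})` raises an error naming the missing `test_report`, so an unverified model cannot slip into validation.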

Data Governance

Data is the lifeblood of AI. The ISO 42001 requirements place a strong emphasis on data quality, integrity, and provenance. Organizations must have policies and procedures for managing the data used to train, validate, and operate AI systems. This includes ensuring data is relevant, representative, and protected against unauthorized access or modification, a critical factor in preventing the exfiltration of sensitive information through GenAI tools.
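Provenance and integrity controls can start with something as simple as recording where each approved dataset came from and fingerprinting it, then refusing to use it if the bytes change. The sketch below assumes a hypothetical `DatasetRecord` structure and example names (`churn-train-v1`, `crm-export-2024-q1`); it uses SHA-256 from Python’s standard `hashlib` module to detect modification, which is only one part of data governance.

```python
import hashlib
from dataclasses import dataclass

@dataclass(frozen=True)
class DatasetRecord:
    """Provenance entry for one approved dataset (fields are illustrative)."""
    name: str
    source: str   # where the data came from
    purpose: str  # e.g. "training", "validation", "operation"
    sha256: str   # checksum captured when the dataset was approved

def fingerprint(data: bytes) -> str:
    """SHA-256 hex digest of the raw dataset bytes."""
    return hashlib.sha256(data).hexdigest()

def verify_before_use(record: DatasetRecord, data: bytes) -> None:
    """Raise if the data no longer matches what was approved."""
    if fingerprint(data) != record.sha256:
        raise ValueError(f"{record.name}: checksum mismatch, possible unauthorized modification")

approved = b"customer_id,churned\n1,0\n2,1\n"
record = DatasetRecord("churn-train-v1", "crm-export-2024-q1", "training", fingerprint(approved))
verify_before_use(record, approved)  # passes silently
```

A checksum catches accidental or malicious changes after approval; it does not by itself establish that the data is relevant or representative, which still requires review.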

Transparency and Explainability

Stakeholders, including users and regulators, need to understand how AI systems make decisions. The standard addresses transparency through controls that require organizations to provide clear information about their AI systems’ capabilities, limitations, and decision-making processes. While full explainability of complex models like LLMs can be challenging, the goal is to offer enough insight to build trust and allow for meaningful human oversight.

Human Oversight

ISO 42001 emphasizes that humans should remain in control of AI systems. Organizations must implement mechanisms for effective human oversight, such as a human-in-the-loop for critical decisions, clear interfaces that let users review and override AI suggestions, and accountability structures for AI-driven outcomes.
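A human-in-the-loop rule can be expressed as a routing decision plus an accountable record of the final outcome. In this sketch, the `AIDecision` fields, the 0.9 confidence floor, and the rule that critical decisions always go to a person are hypothetical choices; the point is that an override is possible and that someone is named as responsible.

```python
from dataclasses import dataclass
from typing import Optional

CONFIDENCE_FLOOR = 0.9  # assumed threshold below which a human must review

@dataclass
class AIDecision:
    case_id: str
    recommendation: str
    confidence: float  # model-reported confidence between 0.0 and 1.0
    critical: bool     # e.g. affects someone's credit, employment, or health

def route(decision: AIDecision) -> str:
    """Send critical or low-confidence decisions to a human reviewer."""
    if decision.critical or decision.confidence < CONFIDENCE_FLOOR:
        return "human_review"
    return "auto"

def finalize(decision: AIDecision, reviewer: Optional[str] = None,
             override: Optional[str] = None) -> dict:
    """Record the final outcome and who is accountable for it."""
    if route(decision) == "human_review" and reviewer is None:
        raise ValueError(f"{decision.case_id} requires a named human reviewer")
    outcome = override if override is not None else decision.recommendation
    return {
        "case_id": decision.case_id,
        "ai_recommendation": decision.recommendation,
        "outcome": outcome,
        "decided_by": reviewer or "system",
        "overridden": outcome != decision.recommendation,
    }
```

Keeping both the AI recommendation and the final outcome in the record makes overrides auditable, which supports the accountability structures described above.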

Implementing ISO 42001: A Practical Guide

Achieving ISO 42001 compliance is a strategic initiative that requires commitment from leadership and collaboration across departments. It is not merely an IT or data science project but a business-wide effort to embed responsible AI practices into the organization’s DNA. Here are the practical steps to begin your journey.

First, start with a gap analysis. Compare your existing AI governance policies and practices against the ISO 42001 requirements. This will help you identify areas that need attention and develop a realistic implementation roadmap. An ISO 42001 checklist can be an invaluable tool at this stage, providing a structured way to assess your current state and track progress. You can find pre-made checklists or develop your own based on the standard’s clauses.
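A checklist becomes more useful when it can be summarized into a readiness figure and a list of open items. The sketch below tracks status against the top-level requirement clauses of ISO/IEC 42001 (clauses 4 through 10); the status labels and the assumption that a partially met clause counts as half implemented are illustrative choices, not part of the standard. A real checklist would go down to sub-clauses and Annex A controls.

```python
from collections import Counter

# Top-level requirement clauses of ISO/IEC 42001 (shared harmonized structure).
CLAUSES = {
    "4": "Context of the organization",
    "5": "Leadership",
    "6": "Planning",
    "7": "Support",
    "8": "Operation",
    "9": "Performance evaluation",
    "10": "Improvement",
}

def gap_report(assessment: dict[str, str]) -> dict:
    """Summarize a gap analysis where each clause is rated 'met', 'partial', or 'gap'.

    Clauses missing from the assessment are treated as gaps and listed separately.
    """
    statuses = {clause: assessment.get(clause, "gap") for clause in CLAUSES}
    counts = Counter(statuses.values())
    # Assumed weighting: a partially met clause counts as half implemented.
    readiness = (counts["met"] + 0.5 * counts["partial"]) / len(CLAUSES)
    return {
        "readiness": round(readiness, 2),
        "open_items": [f"{c} {CLAUSES[c]}" for c, s in statuses.items() if s != "met"],
        "unassessed": [c for c in CLAUSES if c not in assessment],
    }

report = gap_report({"4": "met", "5": "met", "6": "partial", "7": "partial", "8": "gap", "9": "gap"})
print(report["readiness"])  # 0.43
print(report["open_items"])
```

Here clause 10 was never assessed, so it appears both as an open item and in the `unassessed` list, which keeps blind spots from being mistaken for progress.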

Second, establish a formal AI Management System (AIMS). This involves defining AI-related policies, objectives, roles, and responsibilities. Your AIMS should be integrated with other management systems to ensure a unified approach to governance and risk. This is where a strong AI governance framework becomes critical, setting the rules for how AI is used across the organization.

Third, focus on operationalizing the policies. This means implementing the technical and organizational controls needed to meet the standard’s requirements. For instance, to control GenAI usage, you need visibility into which employees are using which tools and what data is being shared. This is where solutions like LayerX become essential. By providing full audit capabilities for all SaaS applications, including “shadow” GenAI tools, LayerX helps enforce granular, risk-based guardrails over all SaaS usage, directly supporting the objectives of ISO 42001 AI governance.

Imagine a scenario: a product manager uses a free, unsanctioned GenAI-powered diagramming tool to create a roadmap containing sensitive, unreleased feature details. Without proper controls, this data could be used to train the tool’s public model, leading to a major data leak. A browser-native solution like LayerX can detect this activity, block the upload of sensitive data, and alert the security team, all without hindering the employee’s productivity with approved tools. This is a practical example of enforcing the data protection principles required by the standard.

ISO 42001 and GenAI: Governing the New Frontier

The rise of GenAI makes the principles of ISO 42001 more relevant than ever. GenAI tools introduce unique challenges, from “hallucinations” and unpredictable outputs to new avenues for data exfiltration. Effective AI governance for GenAI must address these specific risks.

Applied to GenAI, the standard’s requirements translate into mapping GenAI usage, enforcing security governance, and restricting the sharing of sensitive information. LayerX’s enterprise browser extension allows organizations to do exactly that. It provides the visibility needed to understand the scope of GenAI adoption, both sanctioned and unsanctioned, and the controls to enforce policies in real time. For example, you can create a policy that prevents employees from pasting internal source code into a public GenAI chatbot or uploading a confidential financial report for summarization.
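The logic behind such a policy can be sketched as a check on the destination and the content being pasted. This is not LayerX’s policy syntax or API; it only illustrates the rule with hypothetical domains (`ai.internal.example.com`, `chat.public-ai.example`) and deliberately crude regex detectors. Production data loss prevention relies on far more robust classification.

```python
import re
from dataclasses import dataclass

SANCTIONED_AI_DOMAINS = {"ai.internal.example.com"}  # hypothetical approved GenAI tool

# Crude, illustrative detectors for sensitive content.
DETECTORS = {
    "source_code": re.compile(r"^\s*(def |class |import |#include|public static void)", re.MULTILINE),
    "confidential_marker": re.compile(r"\bCONFIDENTIAL\b", re.IGNORECASE),
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
}

@dataclass
class Verdict:
    action: str         # "allow" or "block"
    reasons: list[str]  # names of the detectors that matched

def evaluate_paste(destination_domain: str, text: str) -> Verdict:
    """Block sensitive content pasted into unsanctioned GenAI tools."""
    if destination_domain in SANCTIONED_AI_DOMAINS:
        return Verdict("allow", [])
    hits = [name for name, pattern in DETECTORS.items() if pattern.search(text)]
    return Verdict("block" if hits else "allow", hits)
```

Returning the matched detector names alongside the action gives the security team the context it needs for an alert, while ordinary questions to a public chatbot pass through untouched.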

By applying risk-based guardrails directly in the browser, organizations can move toward continuous ISO 42001 compliance. This proactive stance is far more effective than reactive incident response. It ensures that as new GenAI tools emerge, the governance framework is already in place to assess their risk and apply appropriate controls, preventing the expansion of shadow IT and protecting corporate data where it is most vulnerable: at the point of interaction in the browser.

Challenges and Opportunities on the Path to Compliance

Embarking on the ISO 42001 journey presents both challenges and significant opportunities. The primary challenge is often organizational inertia and the complexity of retrofitting governance onto existing AI systems. It requires a cultural shift toward a mindset of “security and ethics by design.” Another hurdle is the resource investment needed to establish and maintain the AIMS.

However, the opportunities far outweigh the difficulties. Achieving ISO 42001 compliance is a powerful market differentiator. It signals to customers and partners that your organization is committed to responsible AI, building trust and enhancing your brand reputation. Internally, the process drives operational excellence by standardizing processes, improving data quality, and reducing the risk of costly AI-related incidents.

Furthermore, with the EU AI Act now in force (since August 2024, with its obligations phasing in over the following years), ISO 42001 offers a clear, globally recognized framework that can help organizations prepare for and evidence compliance, although certification alone does not establish conformity with the Act. Organizations that adopt the standard now will be several steps ahead of their competitors as these obligations take full effect. They can turn a potential compliance burden into a competitive advantage, showcasing their maturity in AI governance.

The Future of AI Compliance and Governance

The ISO 42001 AI standard is not a final destination but a foundational element in the evolving ecosystem of AI regulation and governance. As AI technology continues to advance at a breakneck pace, we can expect the standard itself to evolve. It sets a precedent for a new generation of standards that will address more specific aspects of AI, such as algorithmic transparency, bias auditing, and AI supply chain security.

For organizations today, the path forward is clear. Adopting a structured approach to AI governance, guided by frameworks like ISO 42001, is no longer optional. It is a strategic imperative for any enterprise looking to harness the power of AI responsibly and sustainably. By using an ISO 42001 checklist to guide implementation and deploying advanced tools like LayerX to enforce policies at the browser level, organizations can build a resilient, compliant, and innovative AI-powered future.