The rapid integration of Generative AI into enterprise workflows is a double-edged sword. On one side, it offers unprecedented productivity gains; on the other, it opens up new vectors for data exfiltration, intellectual property leakage, and compliance violations. As employees increasingly turn to AI assistants for everything from code generation to content creation, security leaders face a critical question: how do you govern the use of a technology that is decentralized, difficult to monitor, and evolving at a breakneck pace? The foundational answer lies in developing a dedicated AI risk register.

Traditional risk management frameworks were not designed for the unique challenges of AI. They fall short in addressing threats like prompt injection, shadow AI usage across thousands of unmanaged tools, and the inadvertent sharing of sensitive corporate data with public Large Language Models (LLMs). A specialized AI risk register is not merely a compliance checkbox; it is a critical, living document that provides the visibility and structure needed to manage the complex threat ecosystem of enterprise AI. It serves as the central nervous system for your AI audit framework, transforming abstract risks into quantifiable, manageable, and auditable entries.

Why a Standard Risk Register Isn’t Enough for GenAI

The nature of Generative AI introduces risks that are fundamentally different from those of traditional SaaS applications. Attempting to fit these new threats into an old framework is like trying to navigate a city with a map of a different one. The core deficiencies of standard registers when applied to AI include:

- Lack of Context: Traditional registers often fail to capture the specifics of the AI model, its training data, or its intended use case. A risk associated with using a public LLM for marketing copy is vastly different from using a fine-tuned model with access to proprietary code.

- Inadequate Threat Categories: GenAI introduces novel attack surfaces. Prompt injection attacks can manipulate an LLM into revealing sensitive data, while the use of insecure third-party plugins can create backdoors into corporate systems. These categories don’t exist in most standard risk templates.

- The “Shadow AI” Problem: The sheer volume of web-based GenAI tools makes manual tracking impossible. Employees often use unsanctioned or personal AI accounts, creating a massive visibility gap for security teams. A 2025 report found that nearly 90% of logins to GenAI tools are made with personal accounts, rendering them invisible to organizational identity systems.

- Data Exfiltration Vector: The main interface for most GenAI tools is a simple prompt box, which has become a primary channel for data leakage. An employee might innocently paste a sensitive customer list or a pre-release financial report into an AI tool to summarize it, instantly sending that data outside the organization’s control.

A purpose-built AI risk register addresses these shortcomings by forcing a systematic evaluation of each AI use case, creating a clear line of sight from application to risk to mitigation.

Core Components of a High-Impact AI Risk Register

An effective AI risk register moves beyond simple descriptions. It is a detailed log that provides context, quantifies risk, assigns ownership, and tracks mitigation efforts. It should be structured to answer not just what the risk is, but where it comes from, who owns it, and what is being done about it. The following components are essential for creating a high-impact register.

o Risk ID: A unique identifier for tracking.

o AI Application & Use Case: Be specific. Instead of “ChatGPT,” document “ChatGPT-4 for generating external marketing blog posts” or “GitHub Copilot for Python code assistance in the R&D team”.

o Data Sensitivity: Classify the type of data the AI will interact with (e.g., Public, Internal, Confidential, Proprietary Source Code).

o Risk Description: A clear, concise statement of the potential negative outcome. For example, “Exfiltration of sensitive PII through prompts entered into a public, unvetted LLM.”

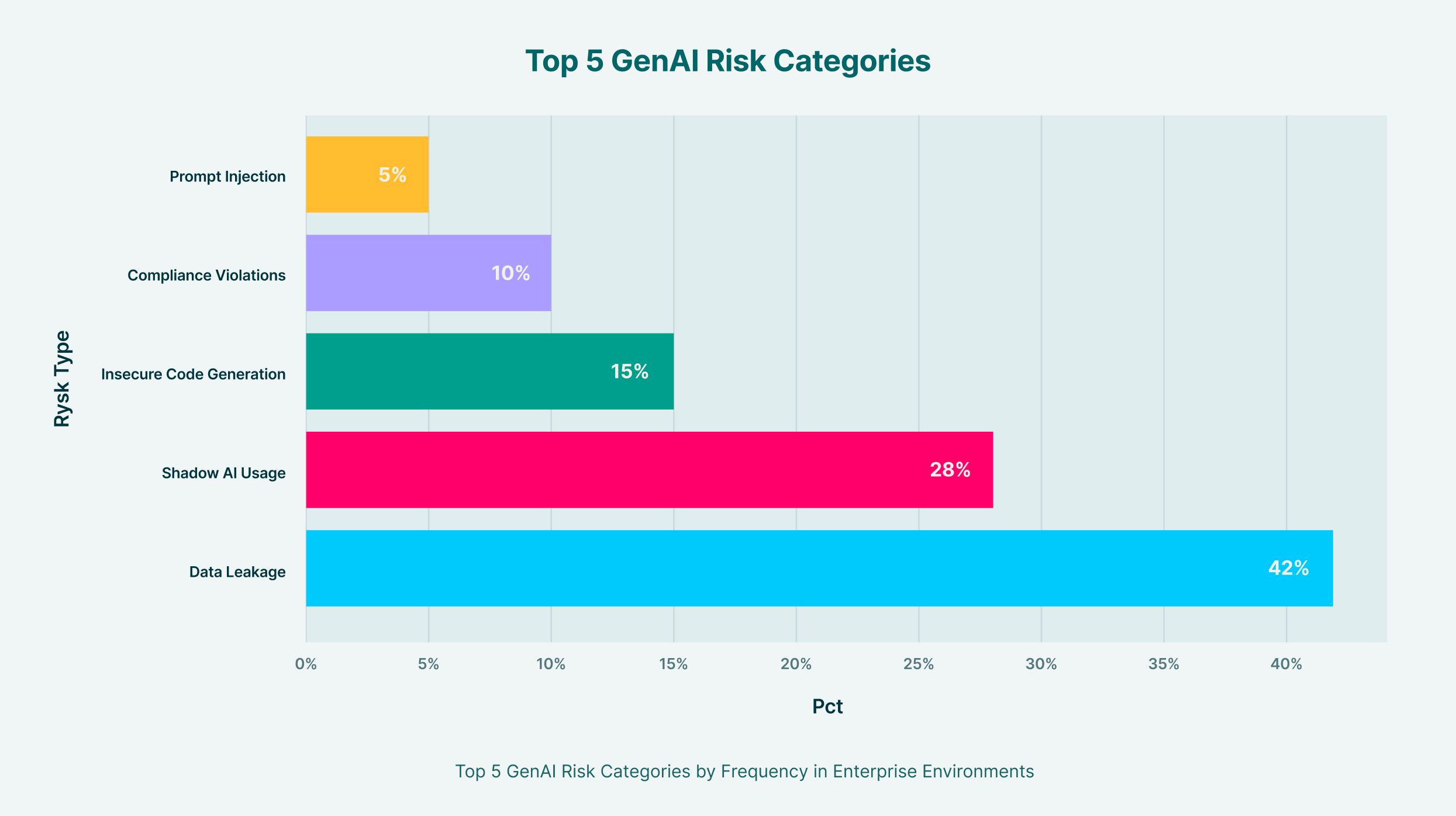

o Risk Category: Standardize categories to group threats. Key categories include Data Leakage, Shadow AI, Insecure Code Suggestions, Compliance Violations (GDPR, HIPAA), Algorithmic Bias, and Prompt Injection.

o Likelihood: The probability of the risk occurring (e.g., High, Medium, Low), based on factors like user access and existing controls.

o Impact: The potential business harm if the risk materializes (e.g., Critical, High, Medium, Low), considering financial, reputational, and operational consequences.

o Inherent Risk Score: A calculated score, often by multiplying likelihood and impact, to prioritize the most severe threats before controls are applied.

o Mitigation Controls: The specific technical or procedural actions taken to reduce the risk. This is where technology becomes critical. Examples include: “Block paste/upload of content matching ‘Project Titan’ regex pattern,” or “Enforce use of corporate accounts with SSO”.

o Risk Owner: The individual or team accountable for managing the risk, such as the CISO, a Data Science lead, or a business unit manager.

o Residual Risk Score: The risk level that remains after mitigation controls are implemented. This helps in demonstrating due diligence and risk acceptance.

o Status & Review Date: The current status of the risk (e.g., Open, Mitigated, Accepted) and a date for the next review. An AI risk register is a living document, not a one-time exercise.
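
To make the scoring components concrete, here is a minimal Python sketch of the likelihood-times-impact calculation described above. The numeric scales (1 to 3 for likelihood, 1 to 4 for impact), the `control_effectiveness` factor used to derive a residual score, and the band thresholds are all illustrative assumptions, not values prescribed by this article or by any standard; calibrate them to your own risk appetite.

```python
from enum import IntEnum


class Likelihood(IntEnum):
    # Assumed numeric scale for the Low/Medium/High labels
    LOW = 1
    MEDIUM = 2
    HIGH = 3


class Impact(IntEnum):
    # Assumed numeric scale for the Low/Medium/High/Critical labels
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


def inherent_risk(likelihood: Likelihood, impact: Impact) -> int:
    """Score before controls: likelihood multiplied by impact."""
    return int(likelihood) * int(impact)


def residual_risk(inherent: int, control_effectiveness: float) -> float:
    """Score after controls.

    control_effectiveness is a hypothetical 0.0-1.0 estimate of how much
    of the inherent risk the mitigation controls remove.
    """
    if not 0.0 <= control_effectiveness <= 1.0:
        raise ValueError("control_effectiveness must be between 0 and 1")
    return round(inherent * (1.0 - control_effectiveness), 1)


def band(score: float) -> str:
    """Map a score to a label using assumed thresholds on the 1-12 scale."""
    if score >= 9:
        return "Critical"
    if score >= 6:
        return "High"
    if score >= 3:
        return "Medium"
    return "Low"


score = inherent_risk(Likelihood.HIGH, Impact.CRITICAL)
after_controls = residual_risk(score, control_effectiveness=0.75)
print(score, band(score), after_controls, band(after_controls))
```

Keeping the inherent and residual scores as separate values, rather than overwriting one with the other, preserves the before-and-after comparison that the register is meant to document.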

AI Risk Register Template

To put this into practice, organizations can adapt the following template. It provides a structured format to begin documenting and tracking GenAI-related risks systematically. This template serves as a starting point for any generative AI risk assessment, forcing teams to evaluate the specific context of each tool’s usage.

- Risk ID: [Assign a Unique Identifier, e.g., AI-001]

- AI Application & Use Case: [Specify the tool and its business purpose]

- Risk Description: [Describe the potential threat or negative outcome]

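- Data Sensitivity: [Classify the data involved: Public, Internal, Confidential, etc.]

- Risk Category: [Data Leakage | Shadow AI | Insecure Code Suggestions | Compliance Violations | Algorithmic Bias | Prompt Injection]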

- Likelihood: [High | Medium | Low]

- Impact: [Critical | High | Medium | Low]

- Inherent Risk: [Calculated score before mitigation]

- Mitigation Controls: [List the specific technical or policy actions taken]

- Risk Owner: [Assign the responsible individual or team]

- Residual Risk: [Risk level after controls are applied]

- Status: [Open | In Progress | Mitigated | Accepted]

- Next Review Date: [YYYY-MM-DD]
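
For teams that prefer to keep the register in code or export it to a spreadsheet, the template can be sketched as a small Python data structure. The field names follow the template, plus a `risk_category` field taken from the component list earlier in the article. The class name `RiskEntry`, the validation rules, and the numeric scores in the example entry are illustrative assumptions, not part of any standard.

```python
from dataclasses import dataclass, asdict
from datetime import date

# Status values taken from the template
STATUSES = ("Open", "In Progress", "Mitigated", "Accepted")


@dataclass
class RiskEntry:
    risk_id: str
    application_use_case: str
    risk_description: str
    data_sensitivity: str
    risk_category: str
    likelihood: str
    impact: str
    inherent_risk: int
    mitigation_controls: list
    risk_owner: str
    residual_risk: int
    status: str
    next_review: date

    def __post_init__(self):
        if self.status not in STATUSES:
            raise ValueError(f"unknown status: {self.status!r}")
        # Assumed sanity rule: controls should never increase the score
        if self.residual_risk > self.inherent_risk:
            raise ValueError("residual risk cannot exceed inherent risk")

    def review_due(self, today: date) -> bool:
        """True once the next review date has been reached."""
        return today >= self.next_review

    def to_row(self) -> dict:
        """Flatten the entry into strings/ints suitable for a CSV row."""
        row = asdict(self)
        row["mitigation_controls"] = "; ".join(self.mitigation_controls)
        row["next_review"] = self.next_review.isoformat()
        return row


# Example entry; the scores and the review date are made-up sample values
entry = RiskEntry(
    risk_id="AI-001",
    application_use_case="ChatGPT for generating external marketing blog posts",
    risk_description="Exfiltration of sensitive PII through prompts "
                     "entered into a public, unvetted LLM",
    data_sensitivity="Confidential",
    risk_category="Data Leakage",
    likelihood="High",
    impact="Critical",
    inherent_risk=12,
    mitigation_controls=["Enforce use of corporate accounts with SSO"],
    risk_owner="CISO",
    residual_risk=3,
    status="In Progress",
    next_review=date(2026, 1, 15),
)
print(entry.to_row())
```

A periodic job could call `review_due` on every entry to flag overdue reviews, which is one simple way to keep the register from going stale.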

Integrating Your Register into a Wider AI Audit Framework

A GenAI risk log or register is most powerful when it functions as a core component of a comprehensive AI audit framework. Such a framework provides the governance structure to ensure AI is used responsibly and securely across the organization. It connects the tactical findings in your risk register to strategic business objectives and regulatory requirements. Key pillars of a robust AI audit framework include:

- AI Governance: Establish a cross-functional committee with members from security, legal, IT, and business units to oversee AI strategy and risk appetite. This body uses the AI risk register as its primary tool for decision-making.

- Policy & Standards: Develop a clear Acceptable Use Policy (AUP) for AI, defining sanctioned tools, permissible data types, and user responsibilities. Standards like ISO/IEC 42001 provide a formal blueprint for building a certifiable AI management system, and the risk register is a key artifact for demonstrating compliance.

- Auditability & Transparency: Your framework must ensure you can answer to regulators and stakeholders. This requires centralized logging of all AI interactions, including prompts, responses, user IDs, and timestamps, to create a defensible audit trail. The GenAI risk log serves as the index for this evidence.

- Continuous Monitoring: The framework should mandate ongoing monitoring for policy violations, such as employees using personal AI accounts or attempting to share restricted data. This moves the framework from a static policy to an active defense.
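
As a rough illustration of the auditability and monitoring pillars, the following Python sketch builds one audit-log record per AI interaction with the fields listed above (prompt, response, user ID, timestamp) and tags it with the register's risk IDs so the register can act as an index into the evidence. The function names, the `personal_account` flag, and the `example.com` corporate domain are hypothetical; a real deployment would use whatever schema its logging pipeline expects.

```python
import json
from datetime import datetime, timezone

# Hypothetical corporate email domain used to spot personal accounts
CORPORATE_DOMAIN = "example.com"


def audit_record(user_id, tool, prompt, response, risk_ids, when=None):
    """Build one audit-trail record for a single AI interaction."""
    when = when or datetime.now(timezone.utc)
    return {
        "timestamp": when.isoformat(),
        "user_id": user_id,
        "tool": tool,
        "prompt": prompt,
        "response": response,
        # Link back to the register entries this interaction relates to
        "risk_ids": sorted(risk_ids),
        # Flag logins that do not use the corporate domain
        "personal_account": not user_id.lower().endswith("@" + CORPORATE_DOMAIN),
    }


def to_json_line(record):
    """Serialize a record as one JSON line for an append-only log."""
    return json.dumps(record, sort_keys=True)


record = audit_record(
    user_id="alice@gmail.com",
    tool="public-llm",
    prompt="Summarize this customer list",
    response="(model output)",
    risk_ids=["AI-001"],
    when=datetime(2025, 1, 1, tzinfo=timezone.utc),
)
print(to_json_line(record))
```

Because such a log stores prompts and responses verbatim, it is likely to inherit the sensitivity of whatever users paste into the tool, so access to it should be restricted accordingly.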

Aligning with established models like the NIST AI Risk Management Framework provides a structured approach to governing, mapping, measuring, and managing AI risks, with the register serving as the central repository for these activities.

From Static Document to Active Defense with LayerX

An AI risk register is only as good as the data within it and your ability to enforce its prescribed controls. Without comprehensive visibility into AI usage and the technical means for real-time policy enforcement, a risk register remains a theoretical exercise. This is where a solution like LayerX becomes indispensable, transforming the register from a passive document into an active defense mechanism.

LayerX operates as an enterprise browser extension, providing the deep visibility and granular control needed to populate, manage, and enforce your AI risk register directly at the point of interaction.

- Discover and Populate: You cannot secure what you cannot see. LayerX provides a complete audit of all SaaS and AI usage across the enterprise, automatically identifying both sanctioned tools and Shadow AI. This discovery capability directly populates the “AI Application” column of your register, eliminating the primary blind spot for most security teams.

- Analyze and Quantify Risk: By analyzing all user activities within AI tools, LayerX provides the context needed to assess likelihood and impact accurately. It reveals what data is being shared, by whom, and with which platforms, providing the evidentiary basis for the risk assessment.

- Enforce Mitigation Controls in Real-Time: This is the most critical link. The “Mitigation Controls” column in your register becomes an active policy set within LayerX. Whether it’s blocking the paste of sensitive data patterns, preventing file uploads to untrusted AI tools, restricting the use of risky browser extensions, or forcing the use of secure corporate accounts, LayerX enforces these rules in the browser before a policy violation can occur. When a user attempts a risky action, they can be blocked or receive a real-time warning, reinforcing security training at the moment of risk.
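
To show what turning a register's mitigation control into an enforceable rule could look like in principle, here is a minimal, product-independent Python sketch of a paste check. It is not LayerX's policy syntax or API. The pattern table, the risk IDs, the SSN-like pattern, and the `evaluate_paste` function are hypothetical; the “Project Titan” pattern echoes the example control mentioned earlier in the article.

```python
import re

# Hypothetical mapping from register risk IDs to blocking patterns
BLOCK_PATTERNS = {
    "AI-001": re.compile(r"project\s+titan", re.IGNORECASE),
    # Assumed example: strings shaped like US Social Security numbers
    "AI-002": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def evaluate_paste(text):
    """Return (decision, matched_risk_ids) for text a user tries to paste."""
    hits = [risk_id for risk_id, pattern in BLOCK_PATTERNS.items()
            if pattern.search(text)]
    return ("block" if hits else "allow", hits)


print(evaluate_paste("Summarize the Project Titan roadmap"))
print(evaluate_paste("Write a haiku about autumn"))
```

Returning the matched risk IDs alongside the decision lets each blocked action be traced back to the register entry that justified it.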

By integrating LayerX, your AI risk register is no longer a forecast of what could go wrong. It becomes a dynamic command center that reflects a true, up-to-the-minute picture of your AI security posture, backed by immediate and automated enforcement. This alignment of policy, visibility, and control is the cornerstone of a defensible and effective GenAI security program.